Ada’s Song

Ada’s Song is a ca. 10-minute work for mezzo-soprano, ensemble and an interactive Piano Machine system employing AI and ML processes. All sound in Ada’s Song is acoustic: the Piano Machine, which is safe for use on all pianos, uses real-time performance data to make an acoustic piano sound.

This musical tribute to Ada Lovelace, mathematician and programmer of the Analytical Engine (an early theoretical precursor of the modern computer), was commissioned in 2019 by the Barbican Centre as part of an homage to her within its Life Rewired series, which presented artistic perspectives on AI. It was premièred by mezzo-soprano Marta Fontanals-Simmons and the Britten Sinfonia, conducted by William Cole, and is dedicated to Marta, with whom I worked closely in developing the vocal part and its real-time generated audio cues. The event was curated by composer and researcher Emily Howard and featured a panel on Ada Lovelace’s research and influence, which included Ursula Martin and other scholars.

Ada Lovelace is credited with the first published imagining of AI applied to the creation of music: she chose composition as an example of a domain whose ‘mutual fundamental relations could be expressed by those of the abstract science of operations’, such that a machine could ‘compose elaborate and scientific pieces of music of any degree of complexity or extent.’ Ada’s Song draws on Lovelace’s theories to investigate further the ‘complexity or extent’ that AI-generated music can attain, and its relationship to human agency and expressivity. It seeks to discover what distinct qualities such music may achieve beyond verisimilitude to human composition, and what music created using AI may tell us about how humans learn to compose, how style is formulated, and the nature of expressivity.

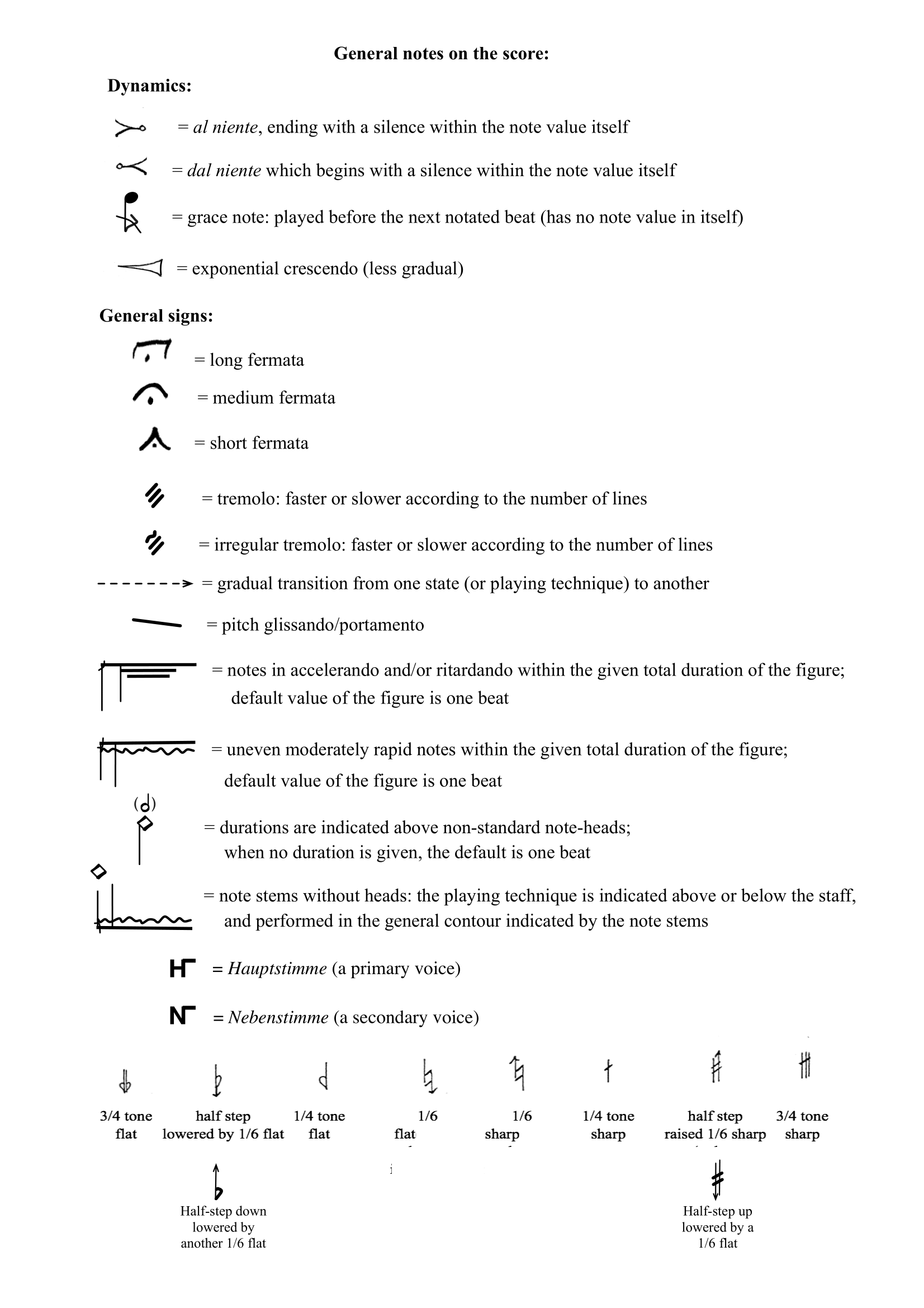

Ada’s Song explores these questions through AI-Assisted Composition (AIAC) at several steps of its compositional process, as well as in its performance. The first step was to create a score, in a process related to my previous works interpreting existing musical repertoire. The ‘model’ composition in this case is Henry Purcell’s Hosanna to the highest, chosen in part because it is a lesser-known work of Purcell’s with a relatively small number of commercially available recordings. I used target-based concatenative synthesis, with Python scripts from Benjamin Hackbarth et al.’s Audioguide environment (which employs k-nearest-neighbour, or KNN, matching), to match note-by-note segments of these recordings to a single instrumental transcription without voice. Because the model work is built on a ground bass, this resulted both in a piling-up of segments and in a reshuffling of the various instantiations of the melody over the repeated ground. The repeating harmonic progression was thus more or less preserved, while the sung text was completely reordered, creating new melodic relationships and combinations of words. I then mapped excerpts from Ada Lovelace’s letters onto this newly created, fragmented sung text. To create a score from the concatenated recordings, I used the IRCAM Orchidée environment to generate orchestrations for each segment, and then concatenated successive orchestrations in Max/MSP to audition the sequences as longer phrases.
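The core idea of target-based concatenative synthesis can be illustrated with a toy nearest-neighbour search. The Python sketch below is not Audioguide’s code or API; it assumes each audio segment has already been reduced to a small, hypothetical descriptor vector (pitch, loudness, spectral centroid), and for each target segment of the transcription it selects the k closest recorded segments by Euclidean distance. All segment names and values are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    """An audio segment summarized by a hypothetical descriptor vector."""
    name: str
    features: tuple[float, ...]  # (MIDI pitch, loudness in dB, spectral centroid in kHz)


def knn(target: Segment, corpus: list[Segment], k: int = 1) -> list[Segment]:
    """Return the k corpus segments closest to the target (Euclidean distance)."""
    return sorted(corpus, key=lambda s: math.dist(target.features, s.features))[:k]


def concatenate(targets: list[Segment], corpus: list[Segment], k: int = 1) -> list[list[str]]:
    """For each target segment, list the names of the k nearest corpus segments.

    With k > 1, several recorded segments are stacked on one target note.
    Descriptors are left unnormalized here for brevity.
    """
    return [[s.name for s in knn(t, corpus, k)] for t in targets]


# Toy corpus: segments cut from several hypothetical recordings.
corpus = [
    Segment("recA_03", (67.0, -12.0, 1.8)),
    Segment("recB_11", (67.0, -20.0, 1.2)),
    Segment("recC_07", (62.0, -15.0, 1.5)),
    Segment("recA_19", (55.0, -18.0, 0.9)),
]
# Toy target: two notes of an instrumental transcription.
targets = [
    Segment("t1", (67.0, -14.0, 1.6)),
    Segment("t2", (55.0, -17.0, 1.0)),
]

print(concatenate(targets, corpus, k=1))  # [['recA_03'], ['recA_19']]
print(concatenate(targets, corpus, k=2))  # [['recA_03', 'recC_07'], ['recA_19', 'recC_07']]
```

Setting k above 1 stacks several recorded segments on a single target note, a simple analogue of the piling-up of segments over the ground. A practical system would usually normalize or weight the descriptors so that no single one (here, pitch) dominates the distance.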

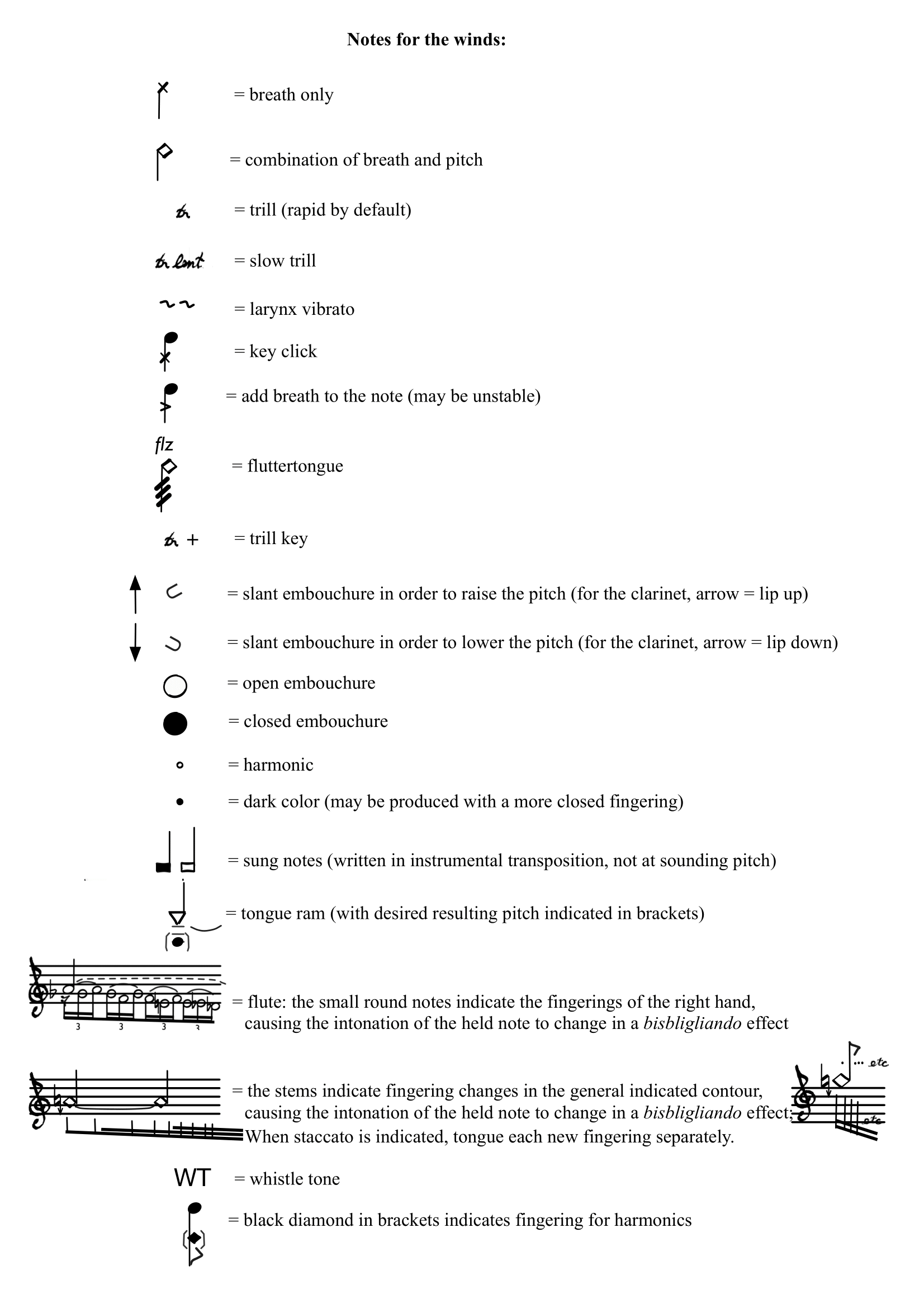

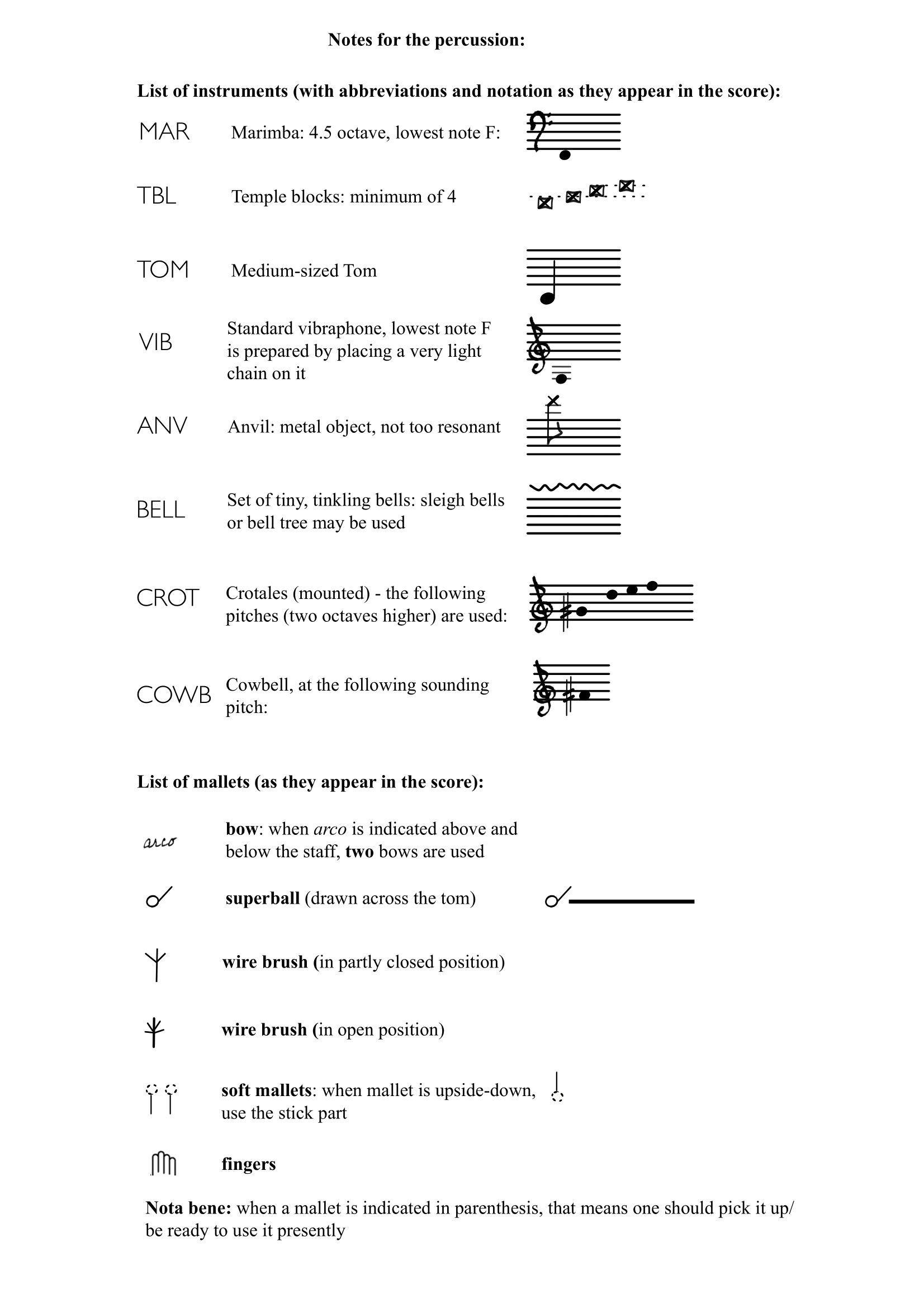

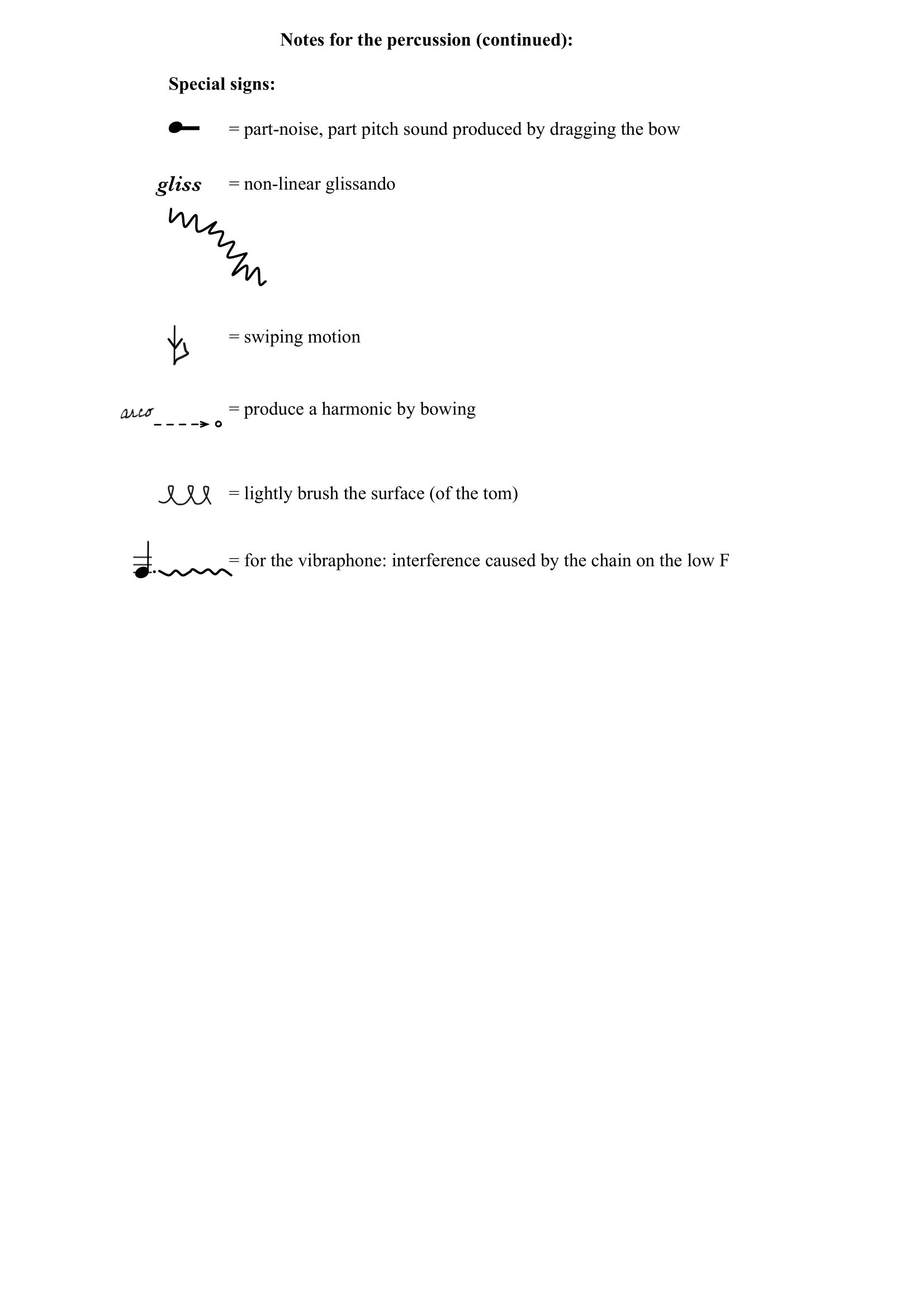

Ada’s Song also employs AIAC in the performance of the Piano Machine. Designed at Goldsmiths College in 2017 in collaboration with Konstantin Leonenko, the Piano Machine plays the strings of the piano directly: a set of motors and finger-like appendages, controlled by microprocessors and piloted by wireless OSC (Open Sound Control) messages, sets the strings into sustained mechanical vibration, allowing dynamic control of each note over time. As with the instrumental parts, its material is generated from a transcription of the concatenated recordings, and, as in those recordings, the ground bass produces a consistently repeating harmonic pattern. This creates a foundation for the expressive intervention of the real-time machine-learning processes described below.
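Open Sound Control messages are compact binary packets. The Piano Machine’s actual address space and arguments are not given here, so the sketch below assumes a hypothetical `/piano/note` address carrying a MIDI-style note number (int32) and an amplitude (float32). It encodes a message following the OSC 1.0 layout (null-terminated strings padded to four bytes, a type-tag string, big-endian arguments) and offers a helper to send it over UDP; the host and port are placeholders.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """OSC string: ASCII bytes, null-terminated, padded to a multiple of 4 bytes."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode a minimal OSC 1.0 message with int32 ('i') and float32 ('f') arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


def send_osc(packet: bytes, host: str, port: int) -> None:
    """Send one OSC packet as a UDP datagram (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Hypothetical address: note number 60 at half amplitude.
msg = osc_message("/piano/note", 60, 0.5)
print(len(msg))  # 24
```

Because OSC is usually carried over UDP, each message is a self-contained datagram, which suits wireless control where an occasional lost packet is preferable to added latency.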

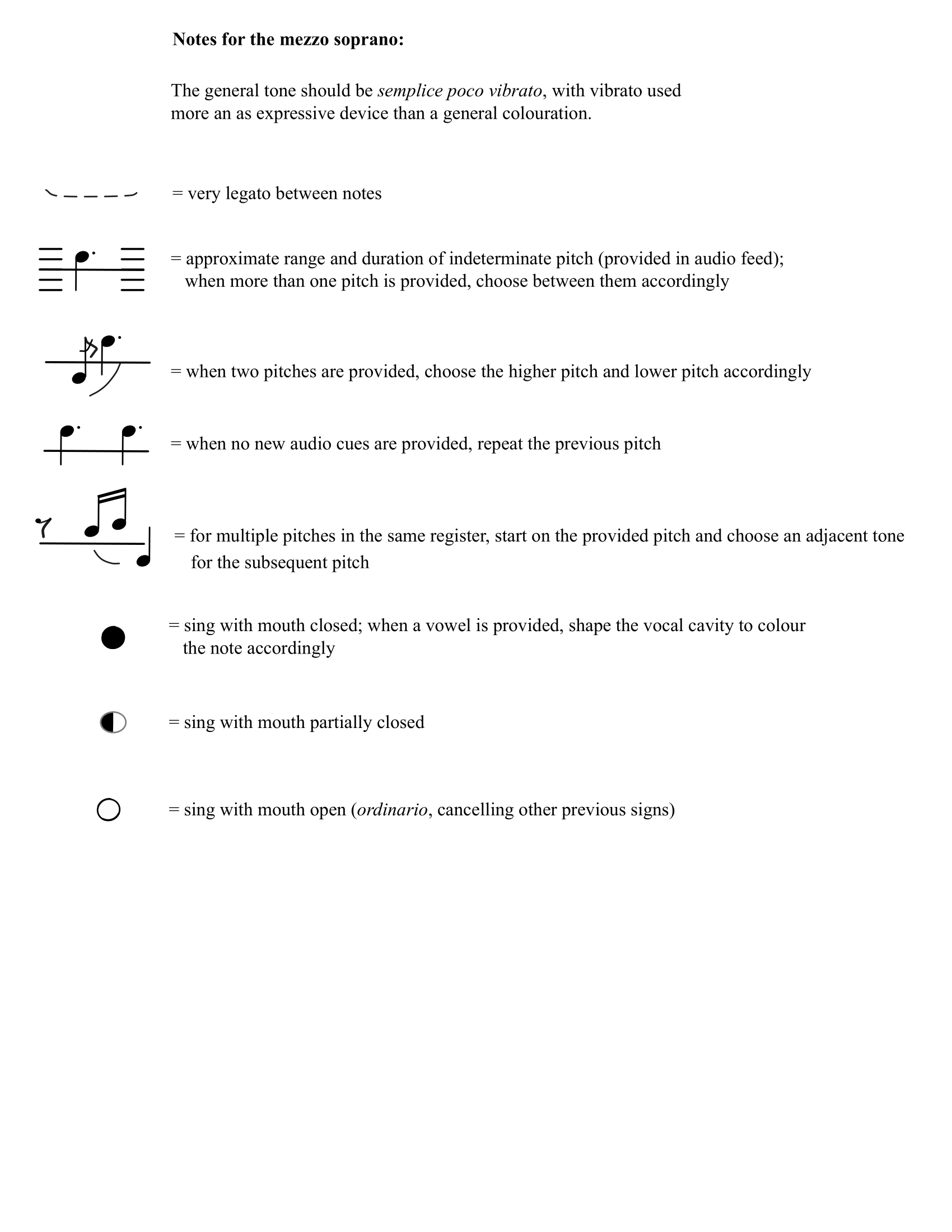

In an attempt to render the Piano Machine more expressive and responsive to the ‘human’ musicians’ performance, the repeating harmonic patterns it performs are shaped by machine-learning processes that ‘listen’ to the instrumentalists during rehearsals and performance. These processes filter the reservoir of notes and amplitudes derived from the concatenated recordings according not only to which notes have been played but also to how they have been performed. This is achieved by building up training sets of timbral data over the course of rehearsals. The Piano Machine thus inscribes itself into the expressive sonic world of the ensemble. The vocalist plays a key role in this process, as the ML system also generates new material to be sung in real time, communicated to the vocalist through audio cues. The vocalist’s interpretation of this new material in turn influences the Piano Machine’s ensuing responses.
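The learning algorithm is not specified here. Purely as an assumption, the sketch below uses k-nearest-neighbour regression: a hypothetical rehearsal training set pairs timbral descriptor vectors with amplitudes, the ensemble’s current timbre predicts a target amplitude, and the reservoir is filtered to notes whose pitch class was just played and whose amplitude lies near that prediction. The function names, descriptors, tolerance and values are all illustrative.

```python
import math


def predict_amplitude(features, training, k=3):
    """k-NN regression: mean amplitude of the k training examples whose
    timbral descriptors are closest to `features`."""
    nearest = sorted(training, key=lambda ex: math.dist(features, ex[0]))[:k]
    return sum(amp for _, amp in nearest) / len(nearest)


def filter_reservoir(reservoir, played_pitch_classes, features, training,
                     k=3, tolerance=0.15):
    """Keep (MIDI pitch, amplitude) pairs whose pitch class was just played
    and whose amplitude is within `tolerance` of the predicted amplitude."""
    target = predict_amplitude(features, training, k)
    return [
        (pitch, amp)
        for pitch, amp in reservoir
        if pitch % 12 in played_pitch_classes and abs(amp - target) <= tolerance
    ]


# Hypothetical rehearsal data: (brightness, noisiness) -> amplitude (0-1).
training = [
    ((0.2, 0.1), 0.2),
    ((0.3, 0.2), 0.3),
    ((0.8, 0.7), 0.8),
    ((0.9, 0.6), 0.9),
    ((0.5, 0.4), 0.5),
]
# Hypothetical reservoir of (MIDI pitch, amplitude) pairs from the transcription.
reservoir = [(60, 0.75), (64, 0.4), (67, 0.7), (55, 0.3), (72, 0.85), (62, 0.9)]

live_features = (0.85, 0.65)  # a bright, fairly noisy ensemble sound
played = {0, 7}               # pitch classes C and G were just heard

print(filter_reservoir(reservoir, played, live_features, training))
# [(60, 0.75), (67, 0.7), (72, 0.85)]
```

Here the note G (55) survives the pitch filter but is dropped because its stored amplitude is far quieter than the bright ensemble sound suggests, which is one way the filtering can respond to how notes are played rather than only which notes.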

Rendering human expressivity perceptible in machine-learning processes, and highlighting the performer’s agency in determining their results, is in keeping with Ada Lovelace’s theorization of the relationship between humans and what we program: ‘The Analytical Engine has no pretensions to originate anything. It can do whatever we know how to order it to perform’ [Lovelace 1843]. Her recognition of human responsibility and agency is one of the many aspects of her work that can be brought to bear on our present discourse about the creative capacity and possible applications of AI.

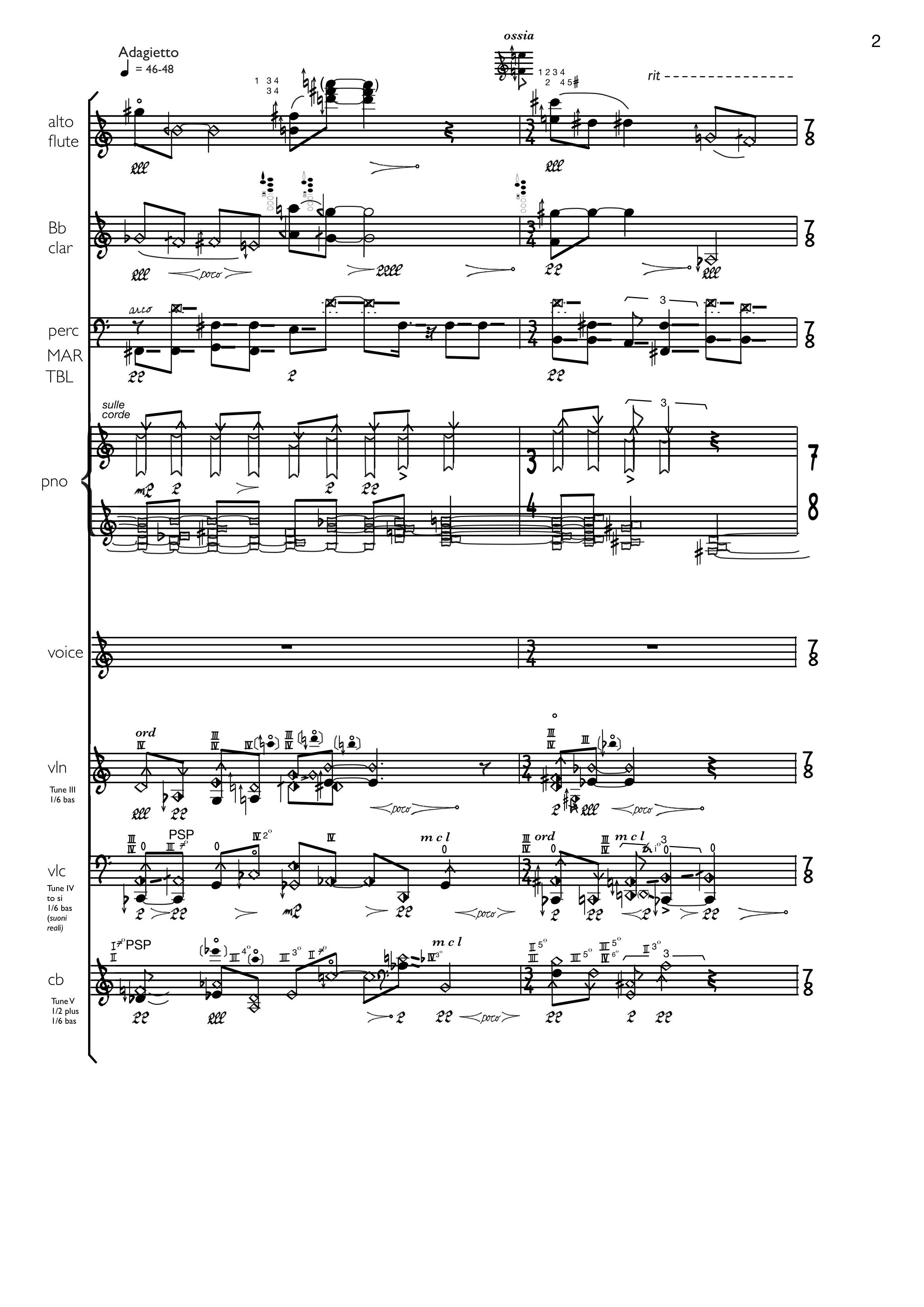

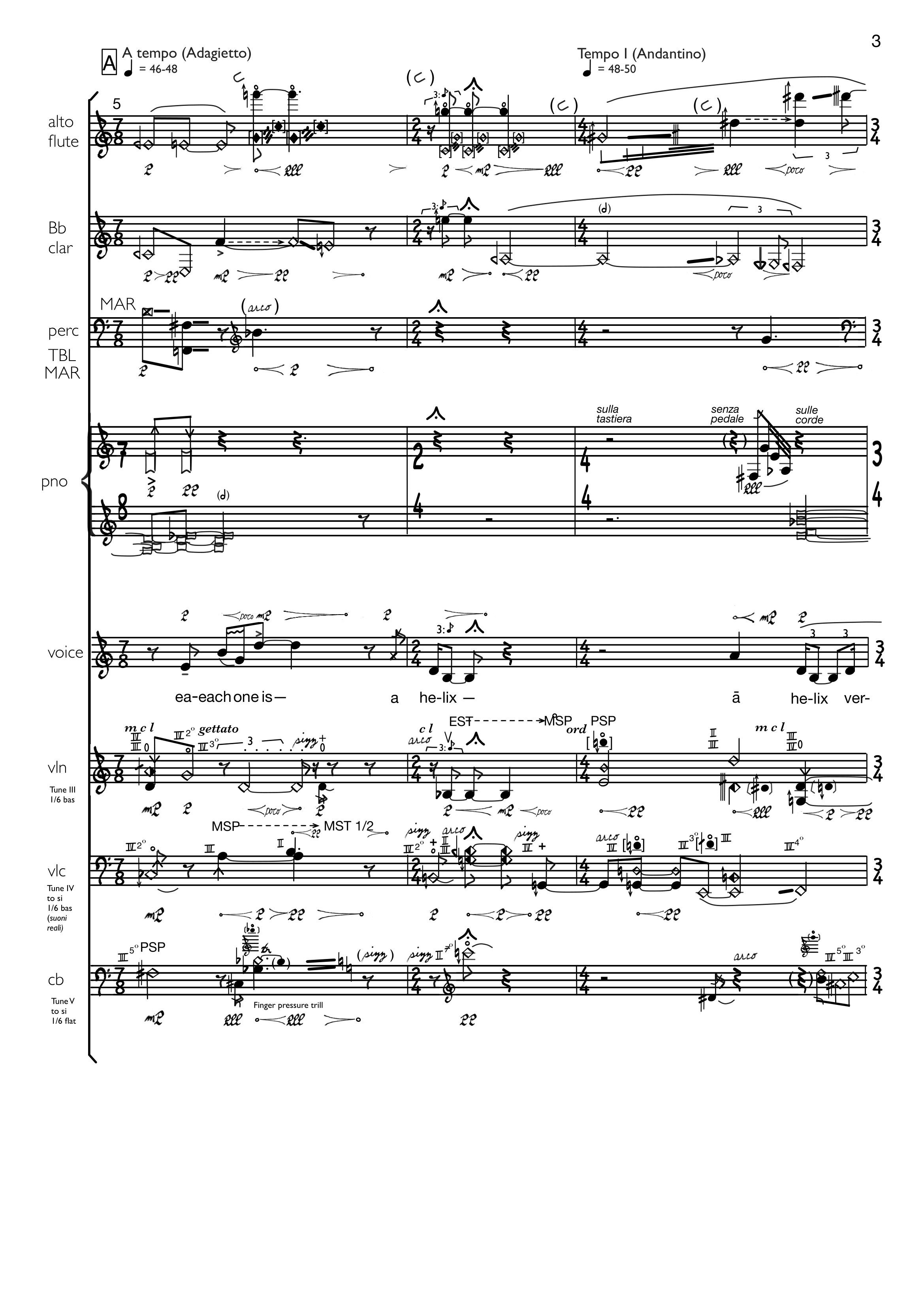

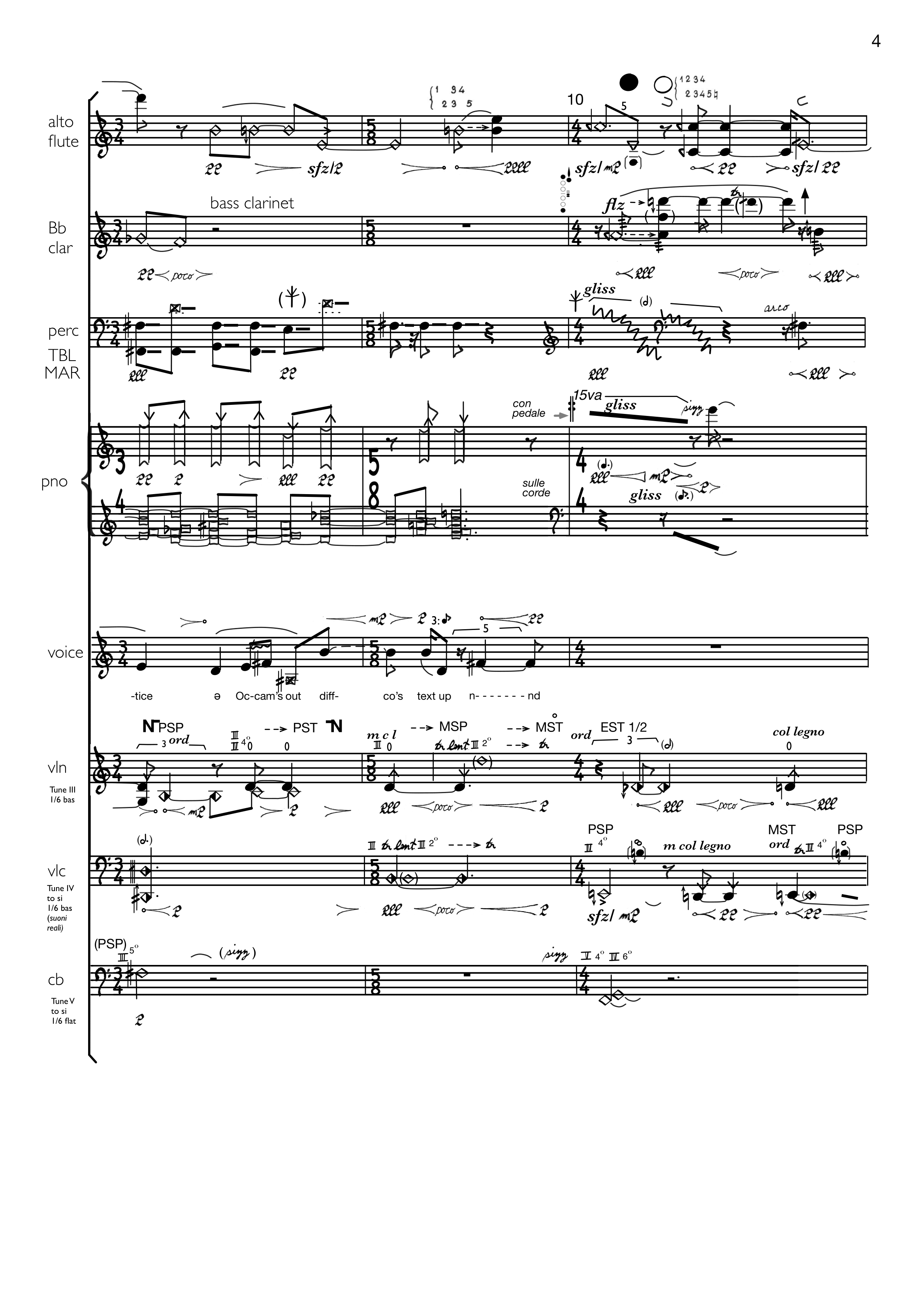

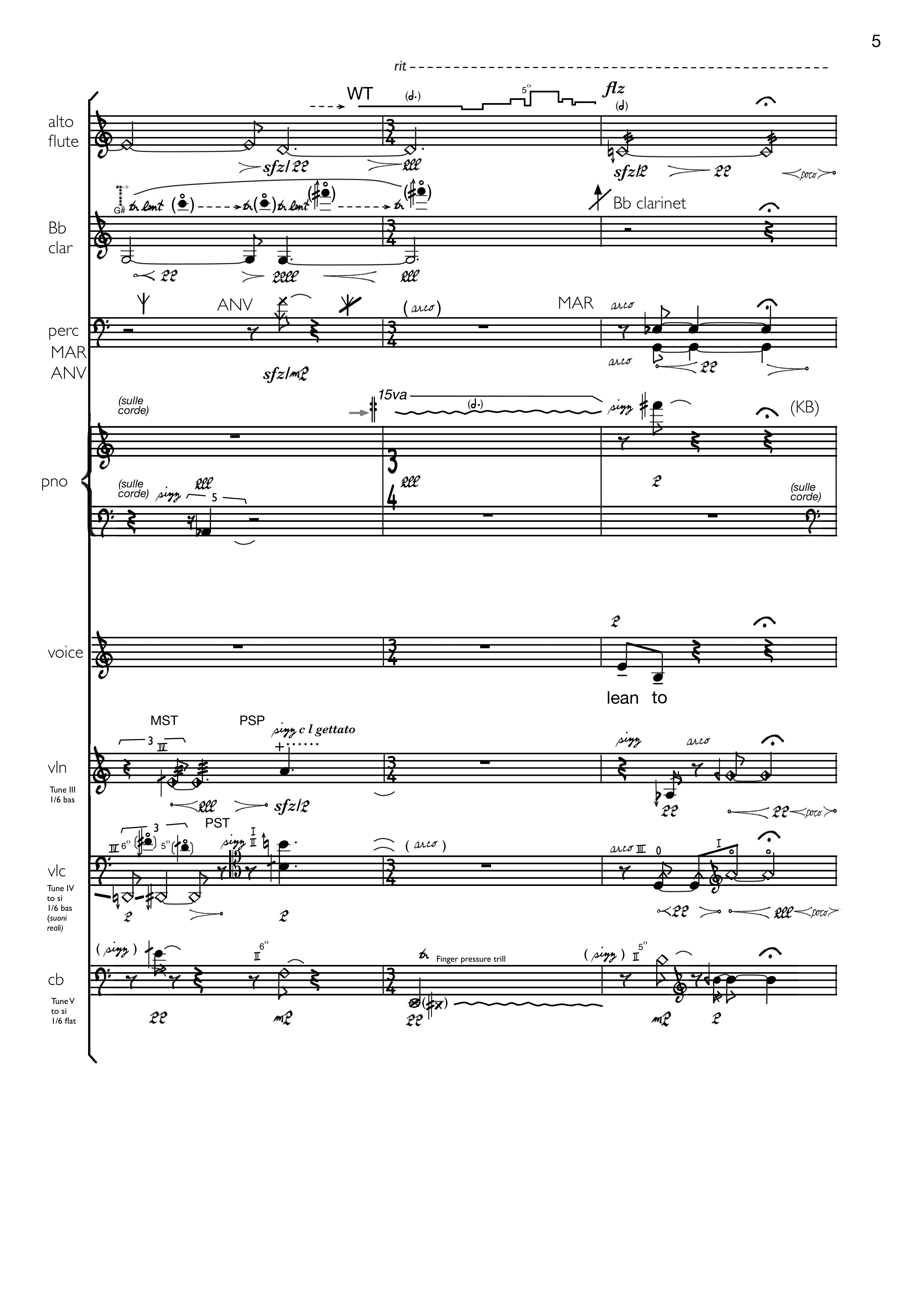

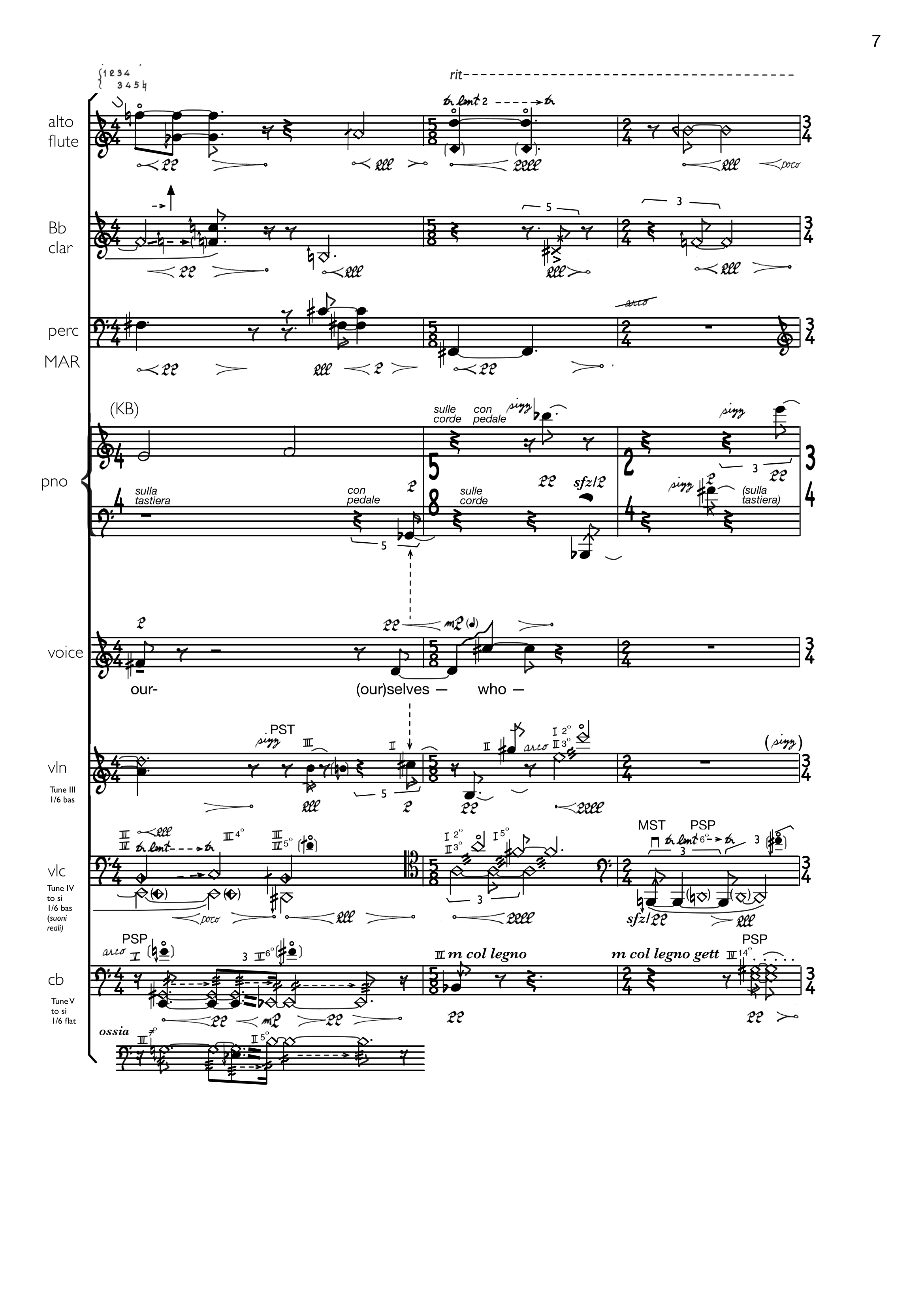

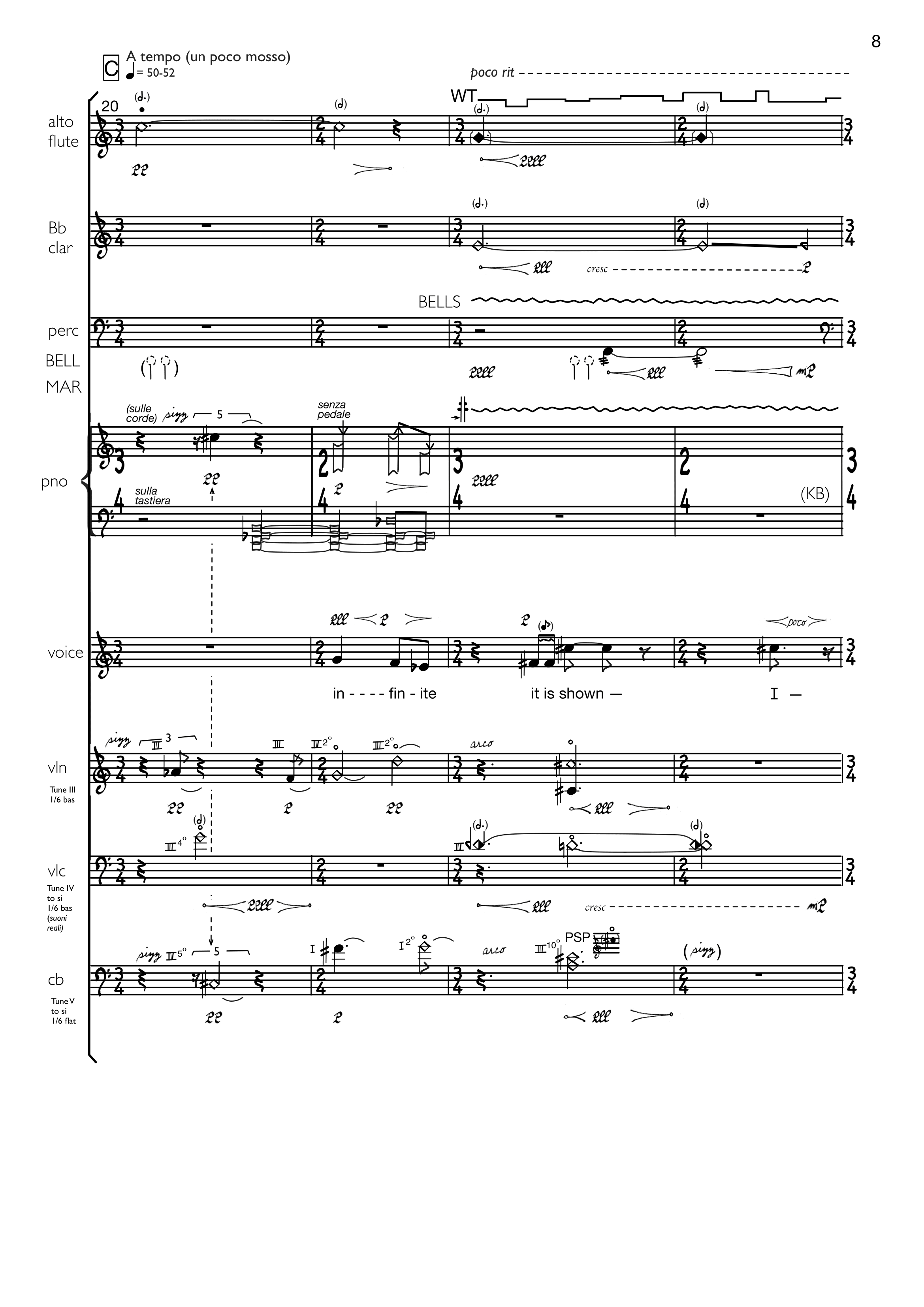

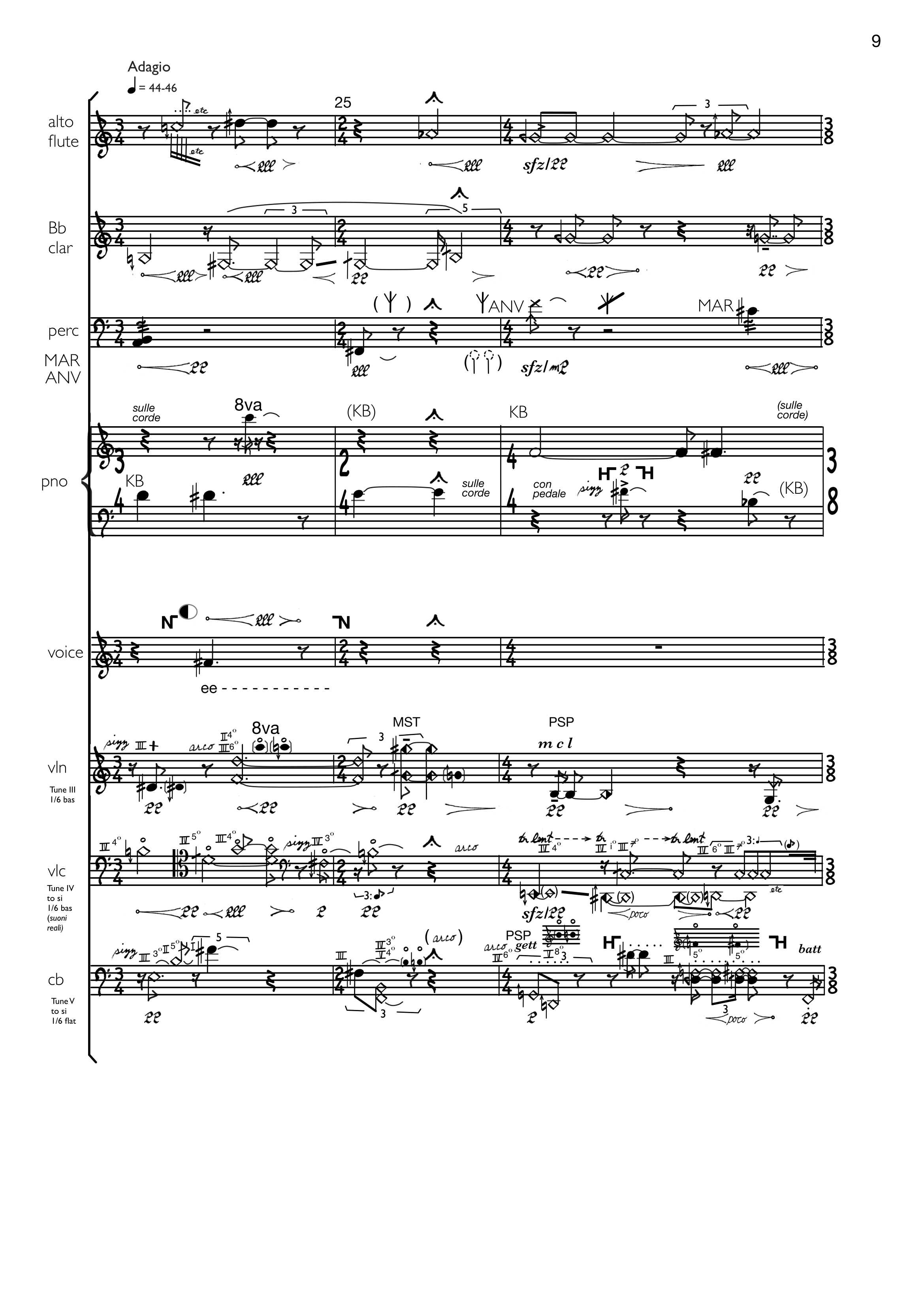

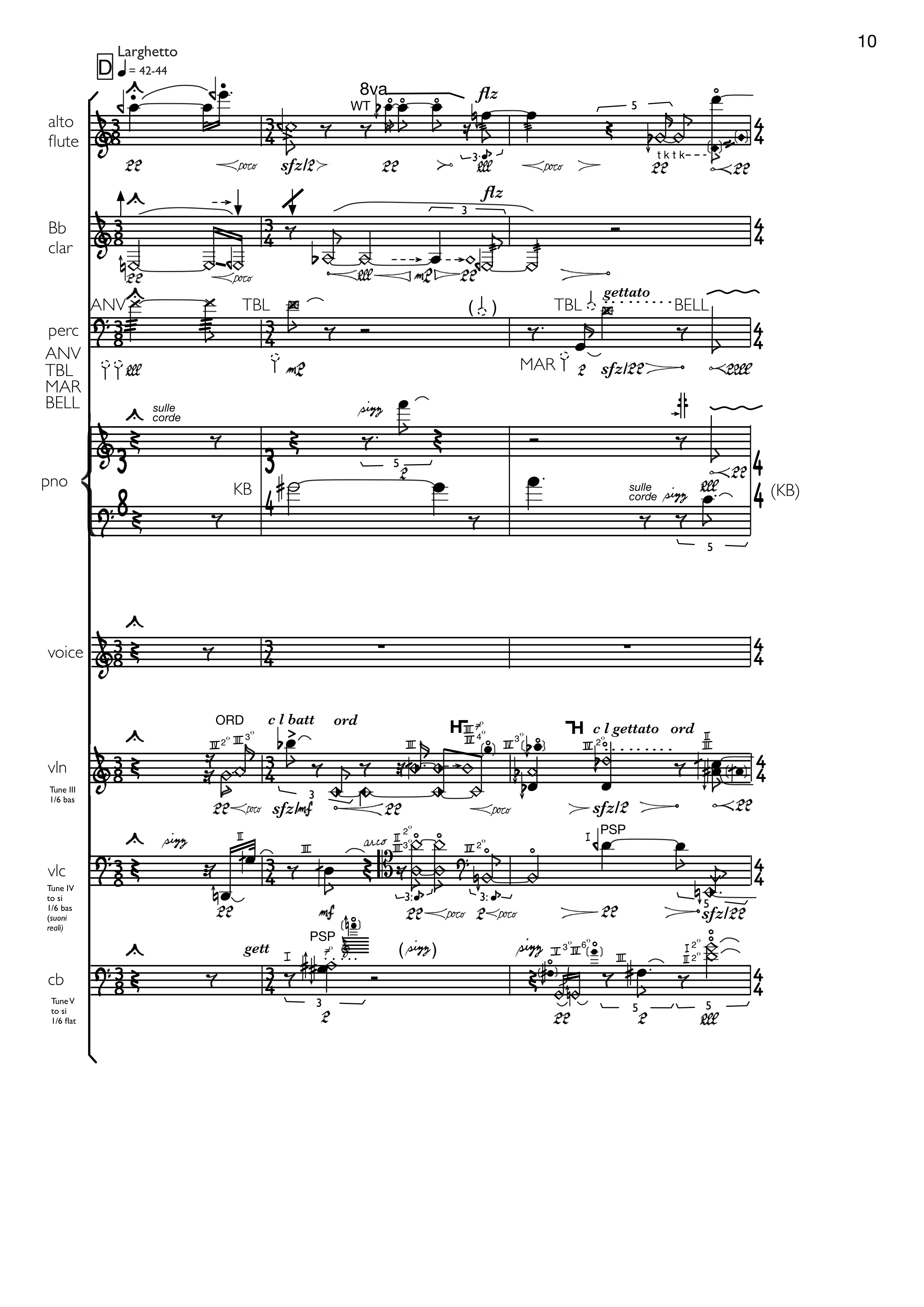

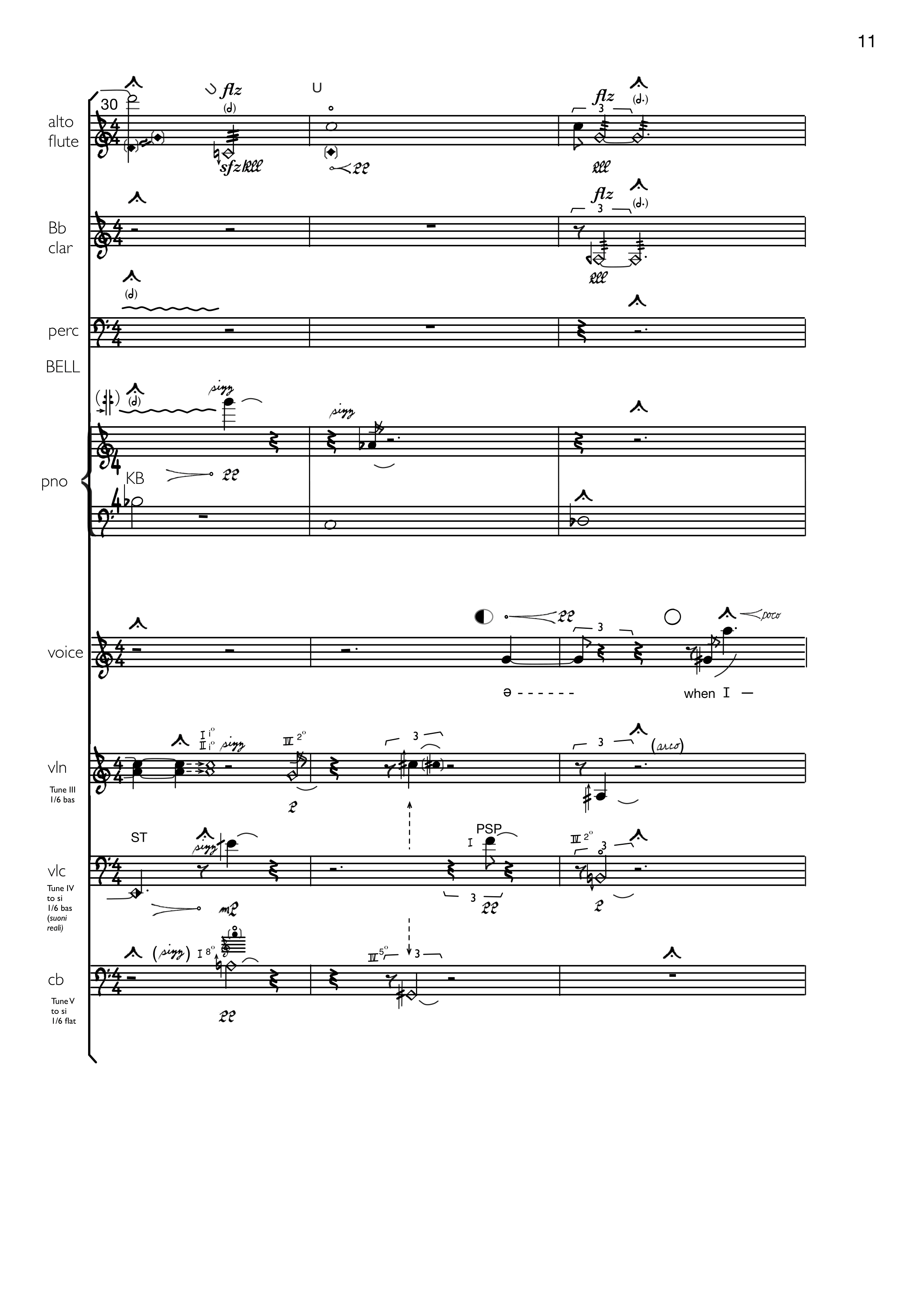

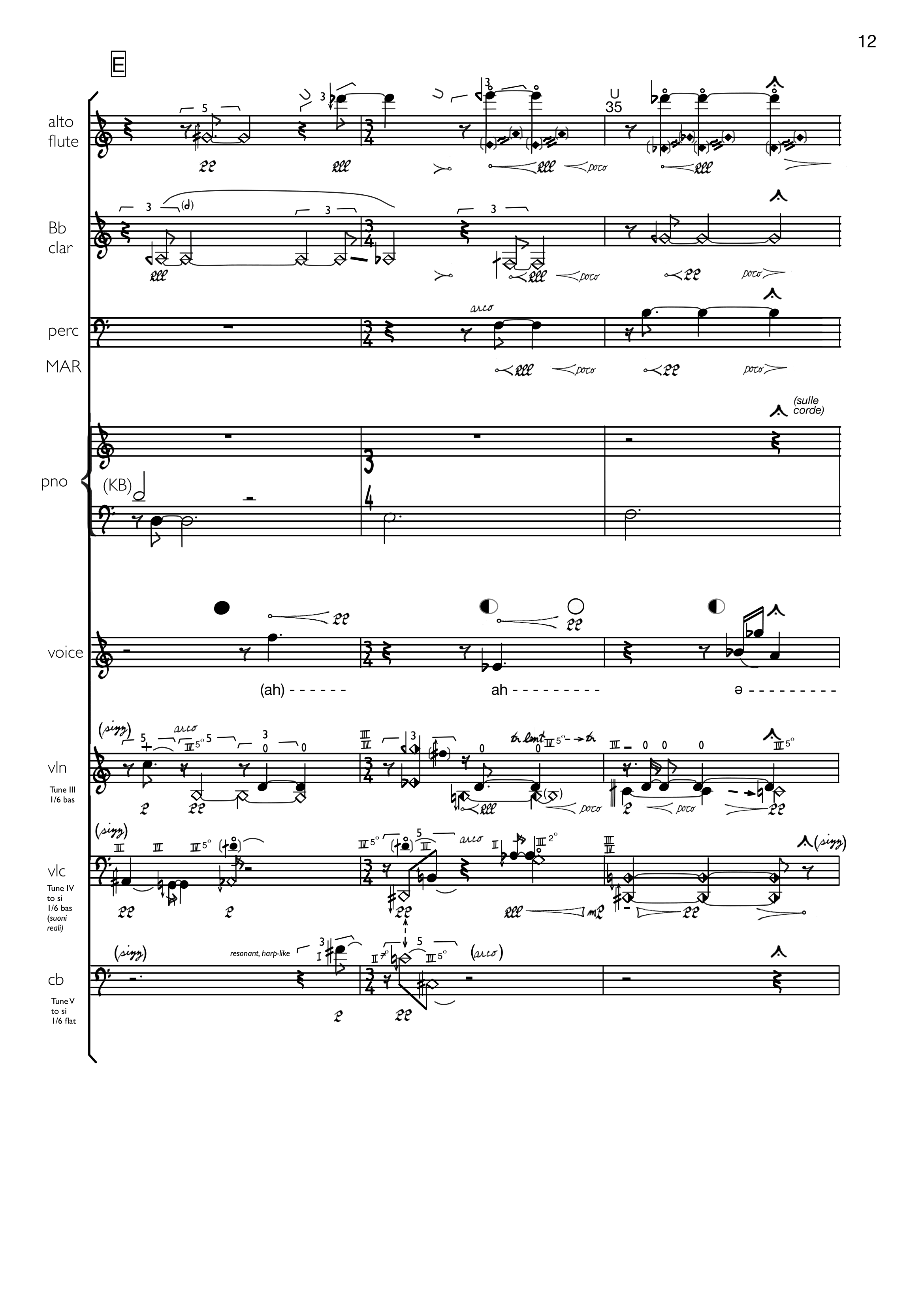

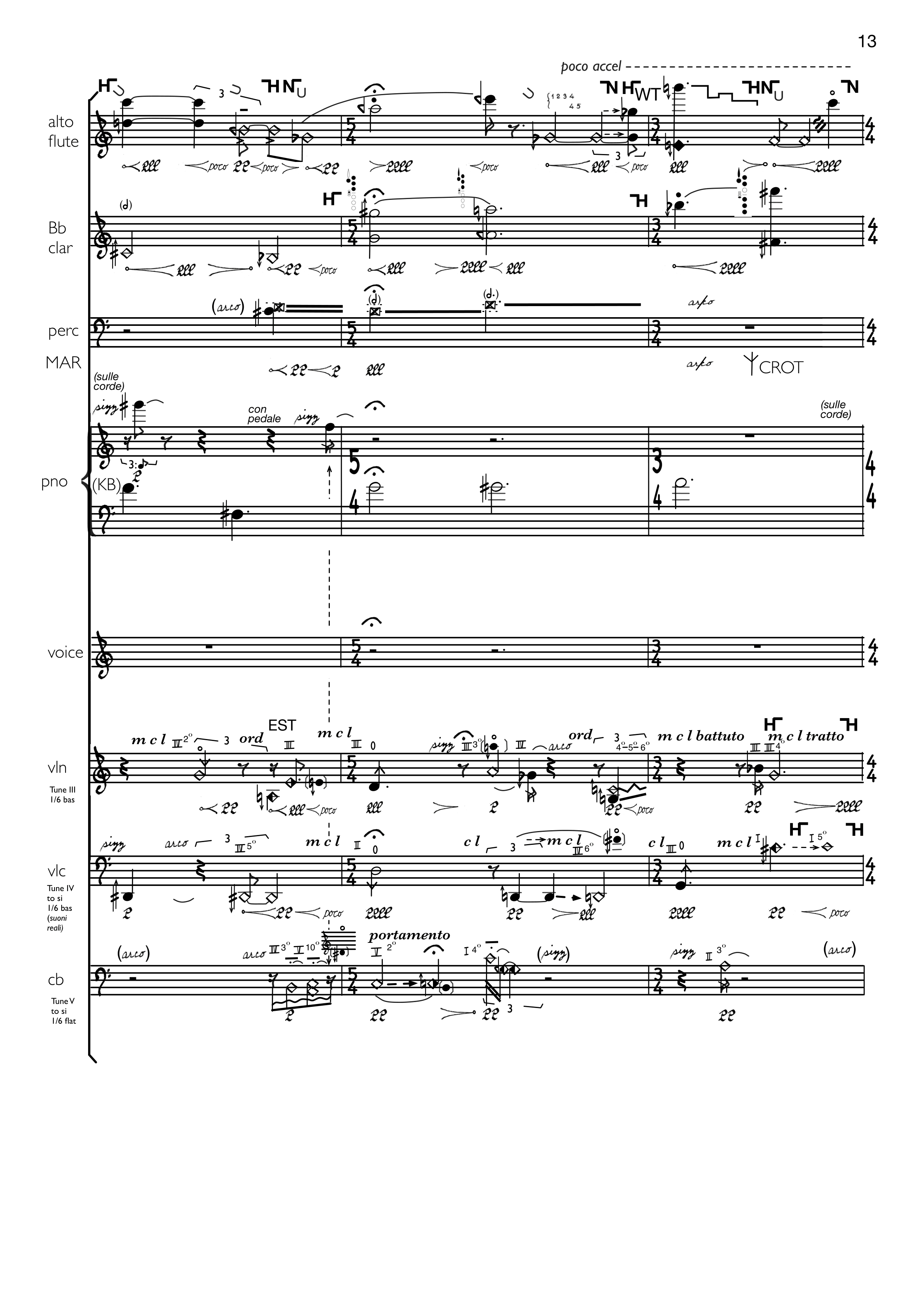

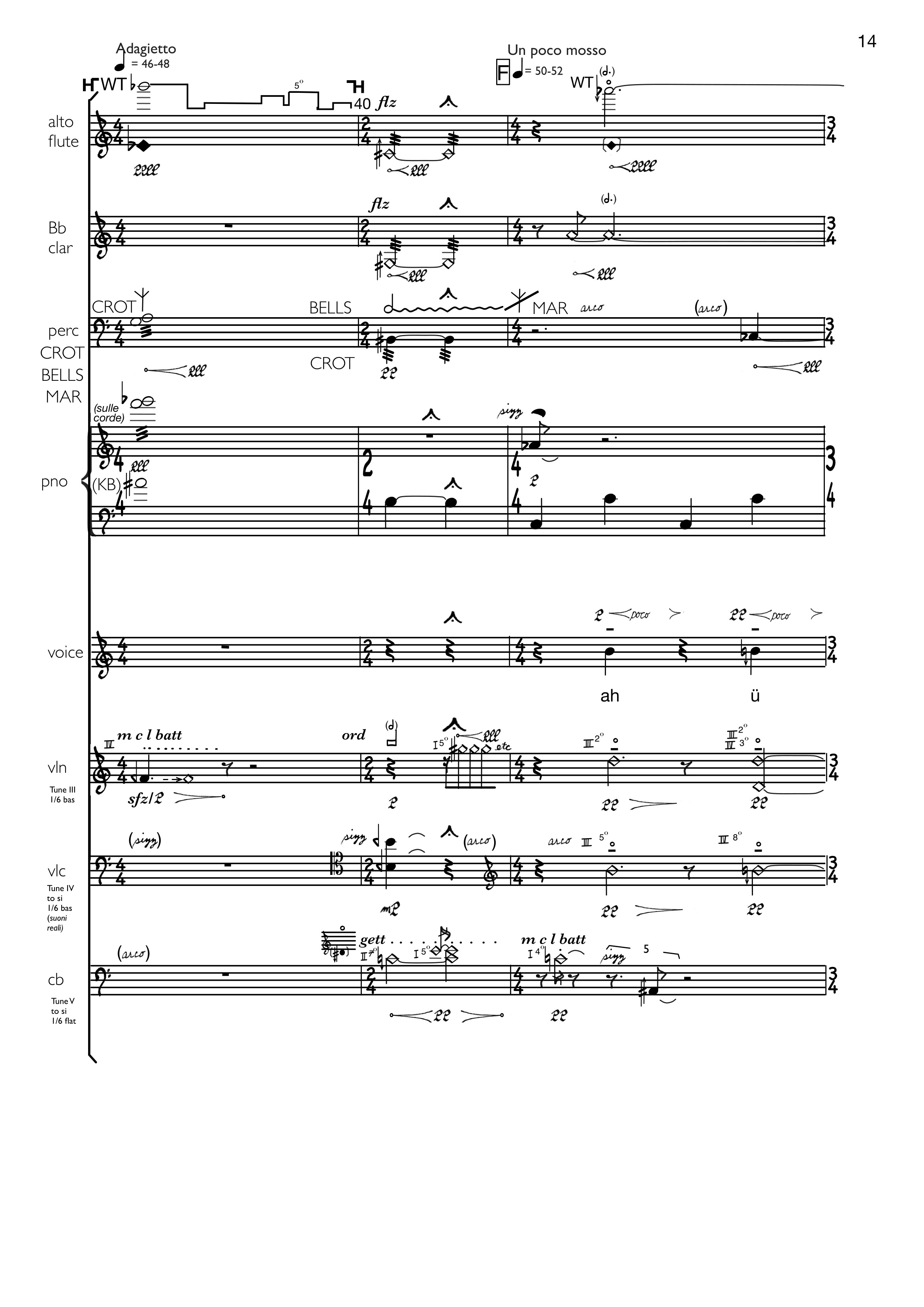

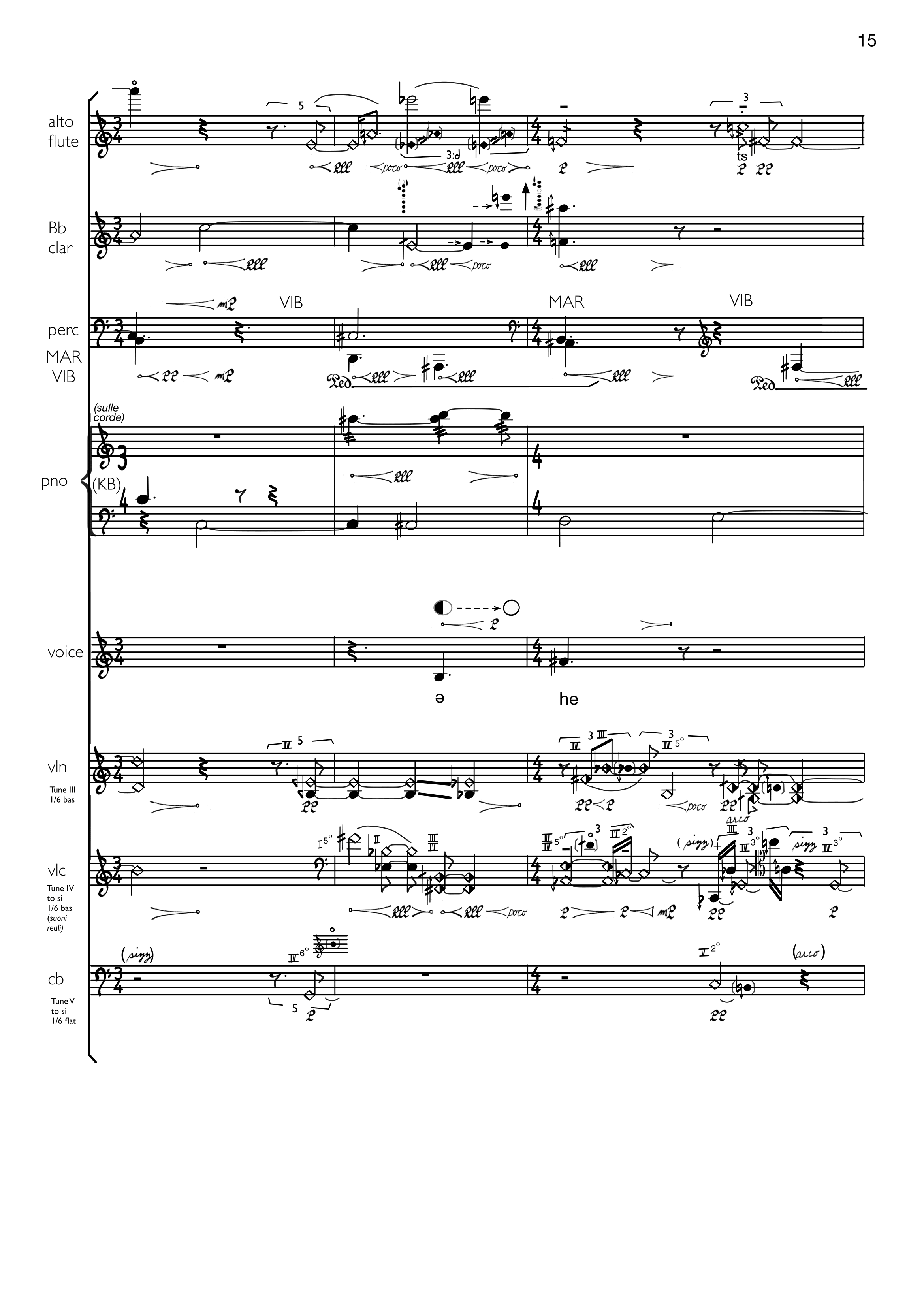

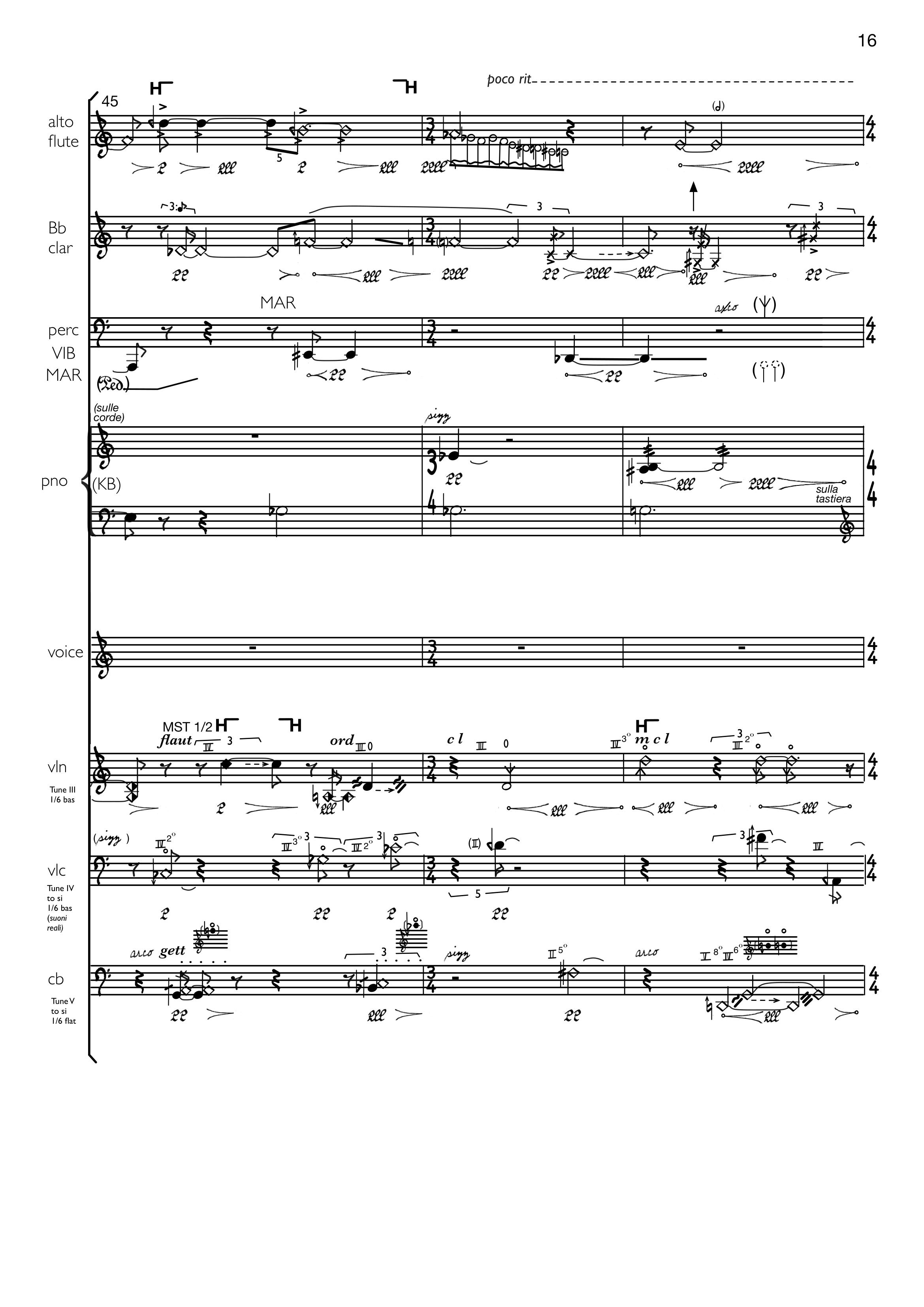

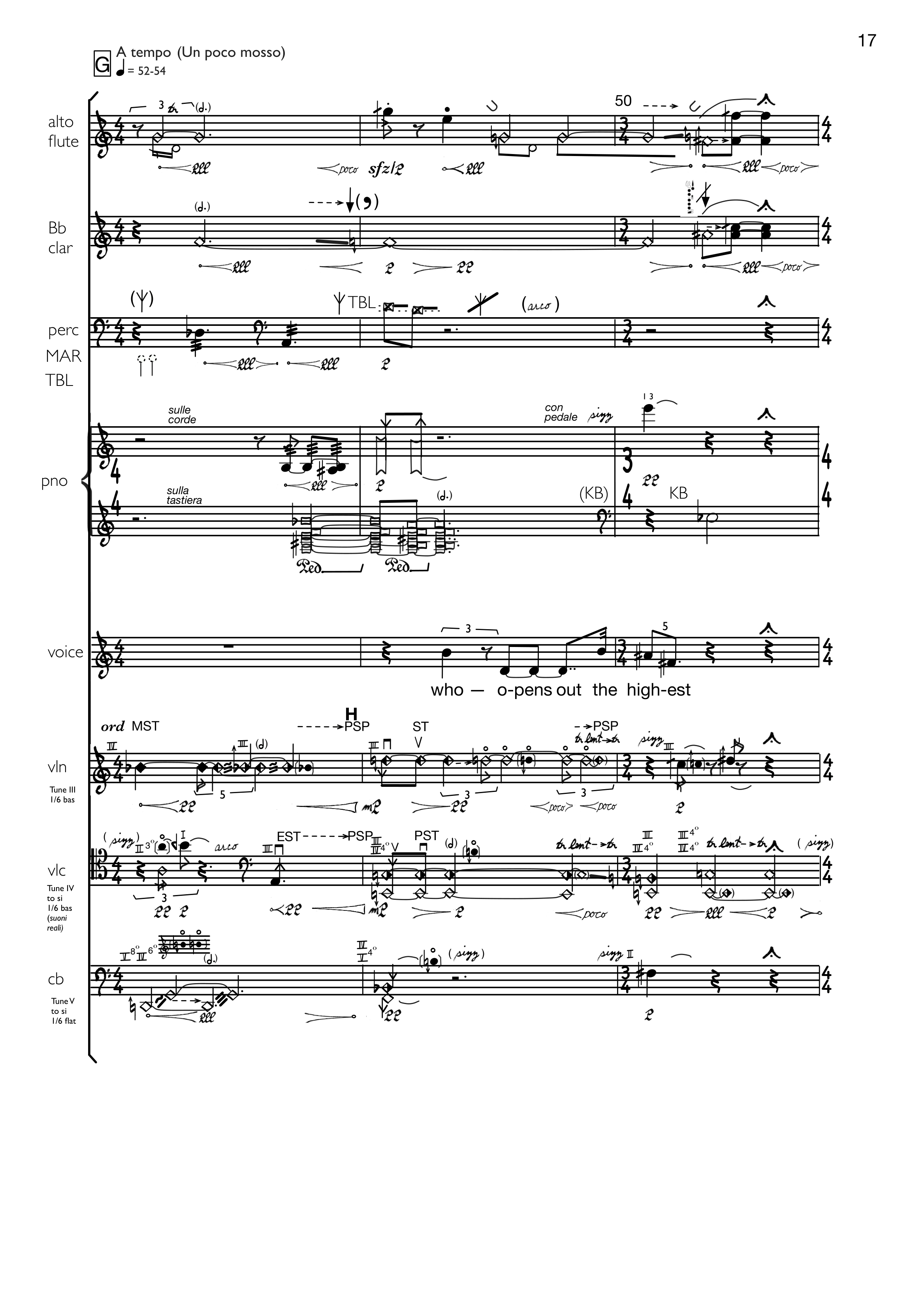

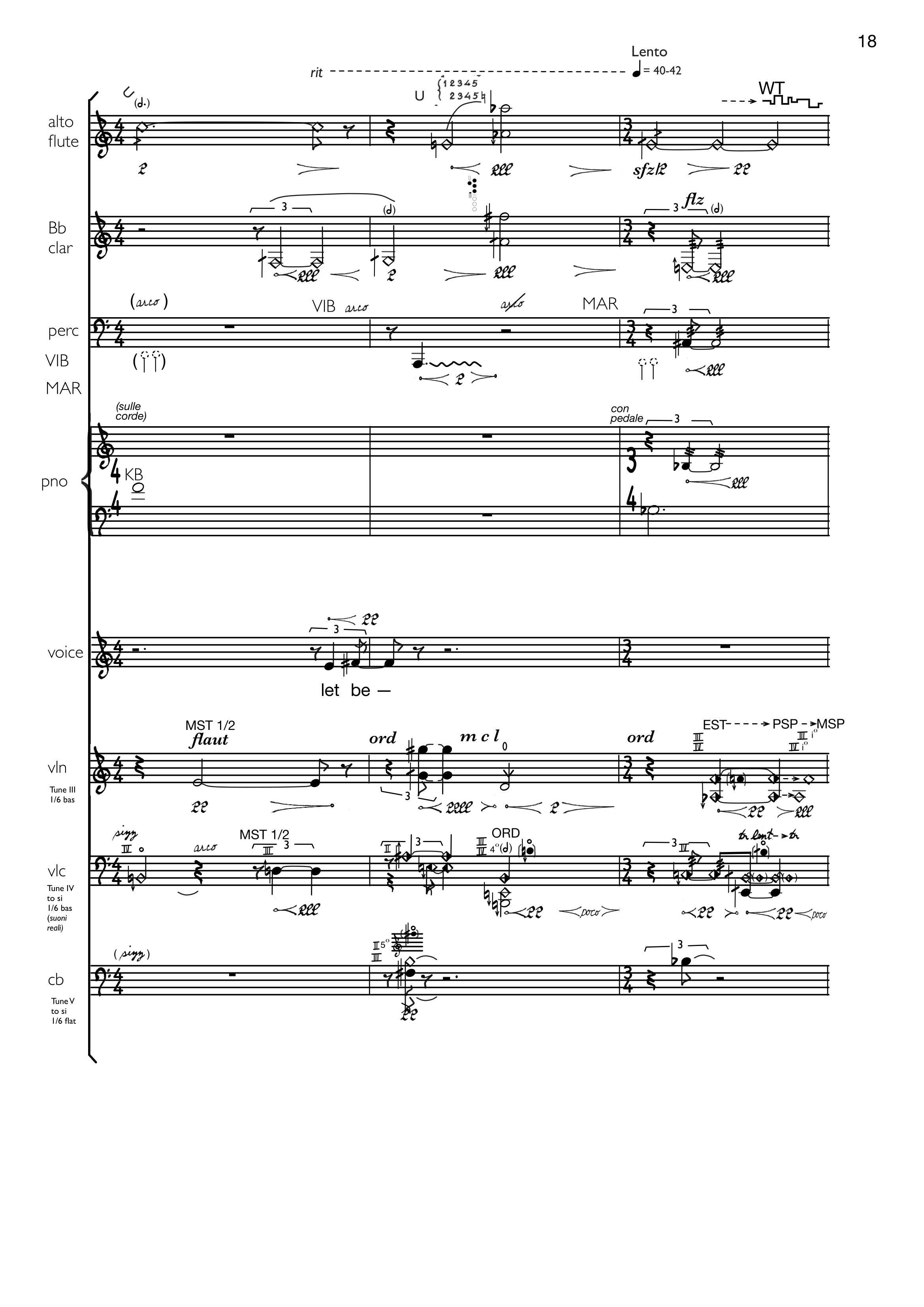

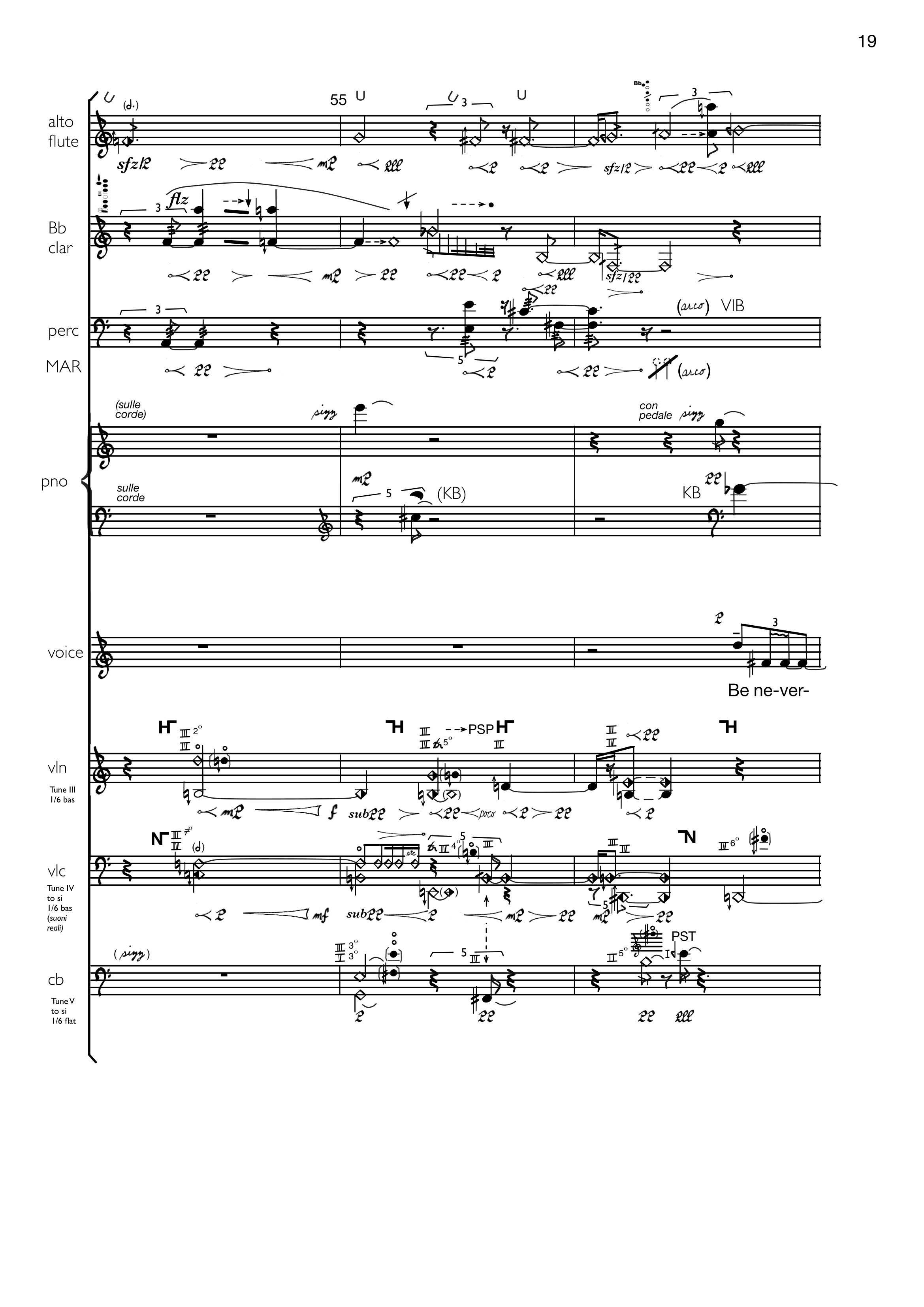

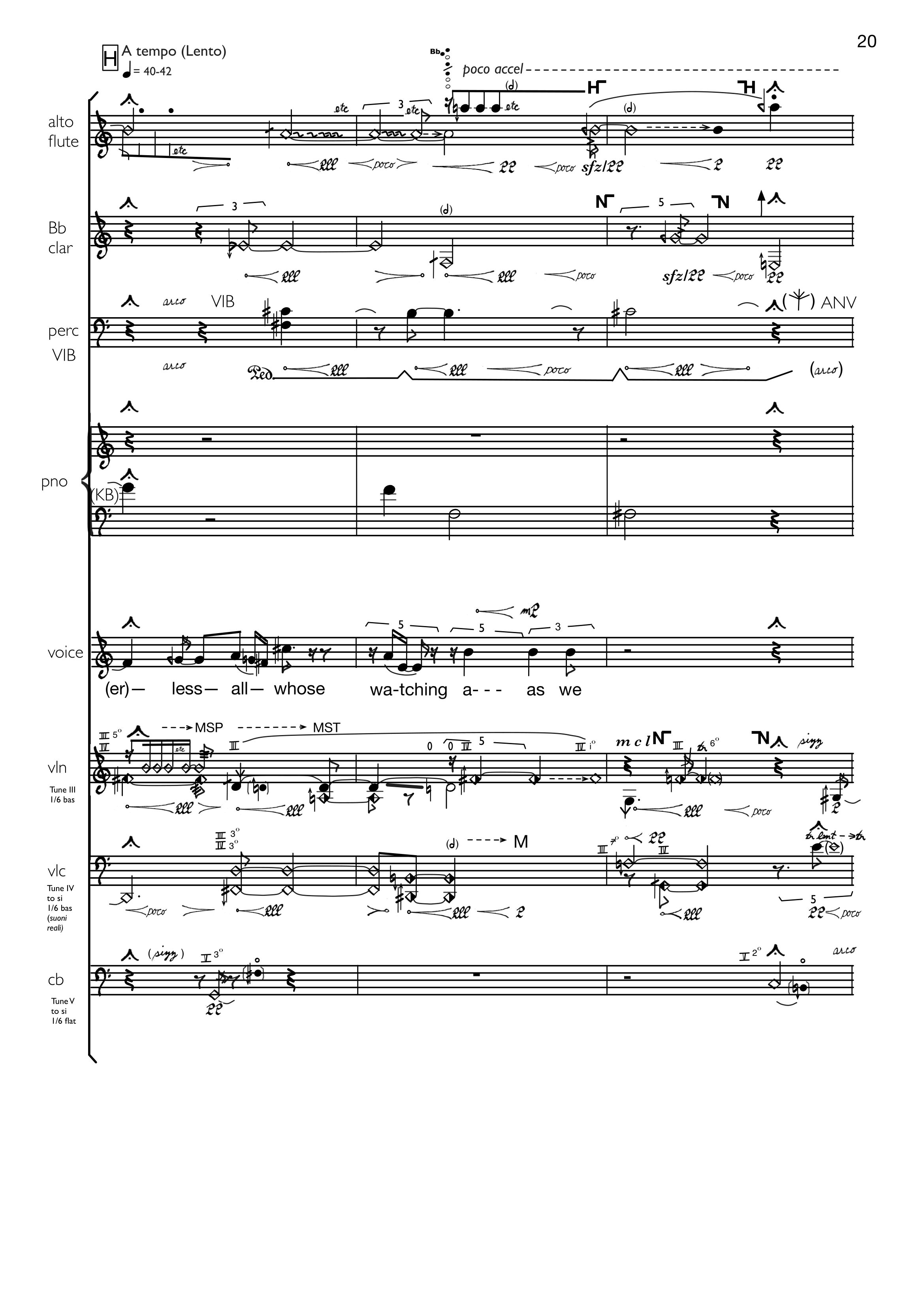

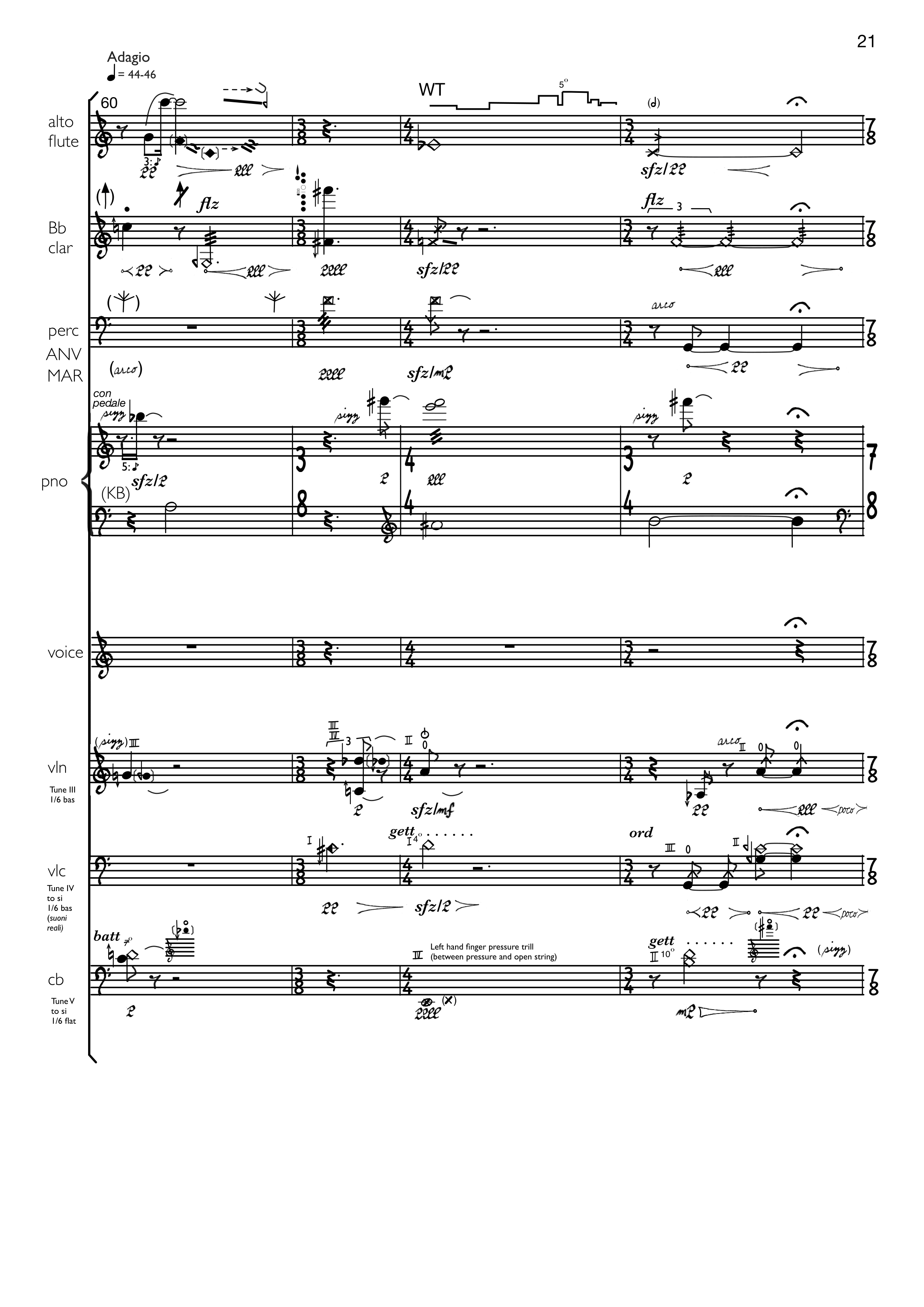

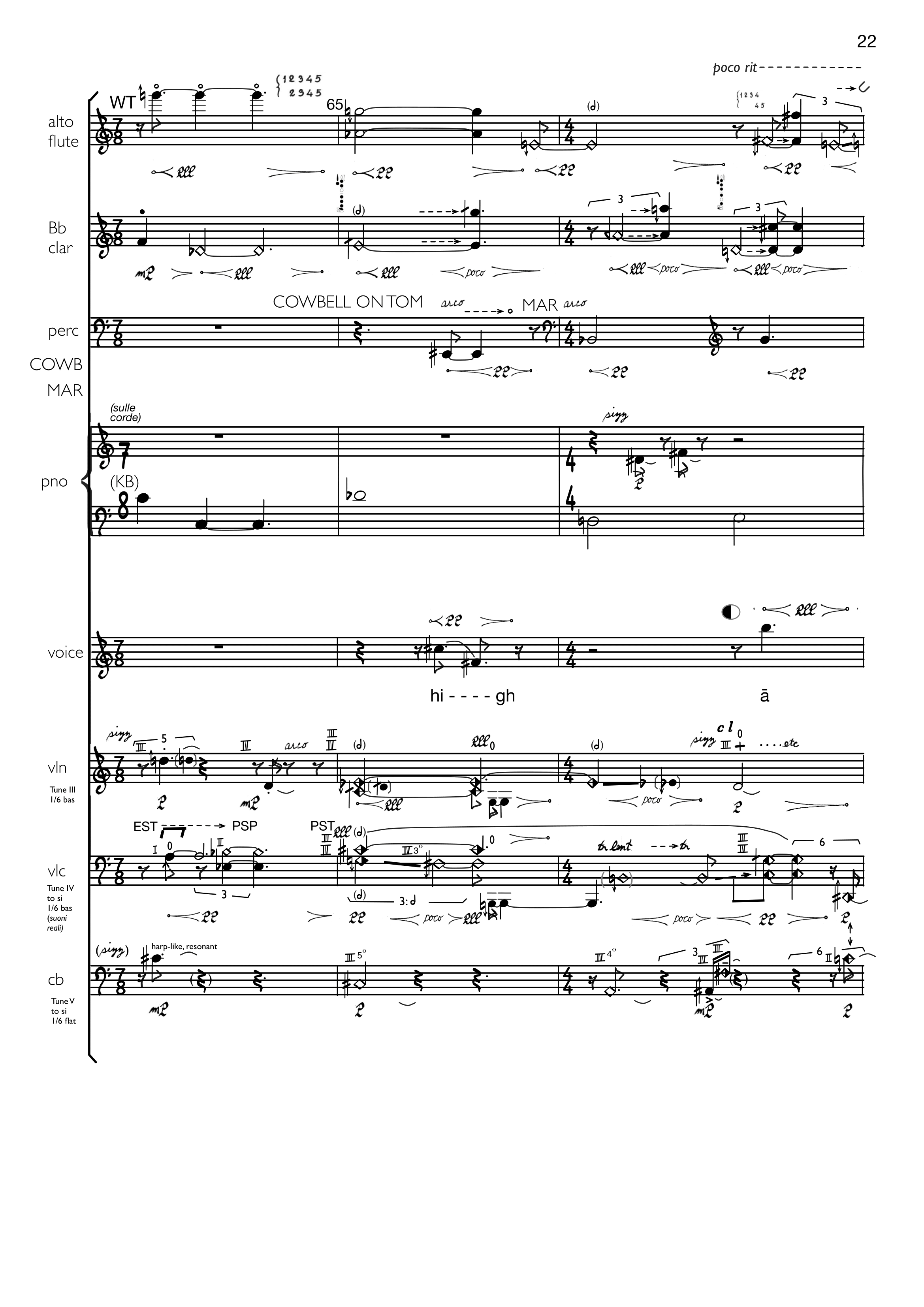

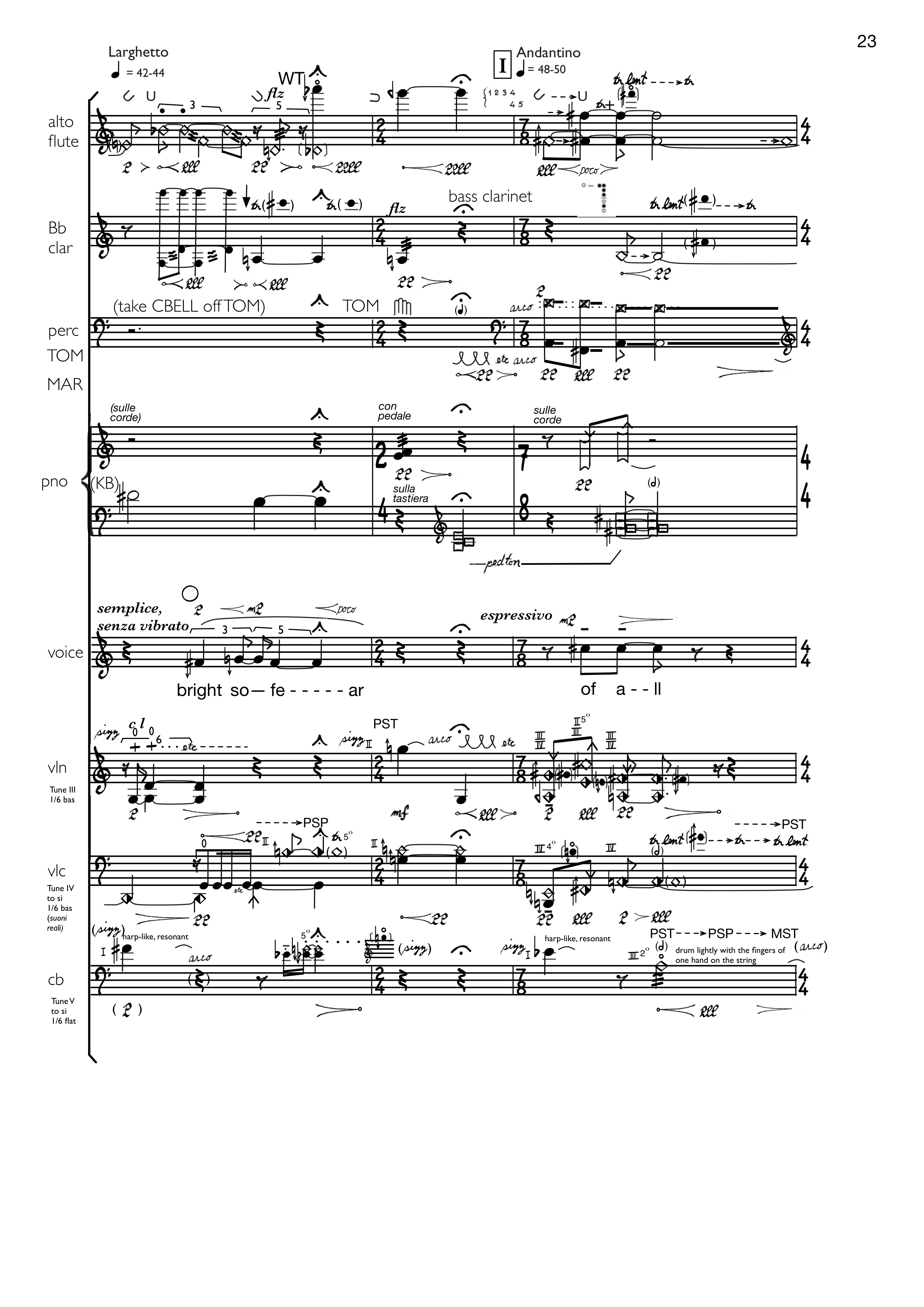

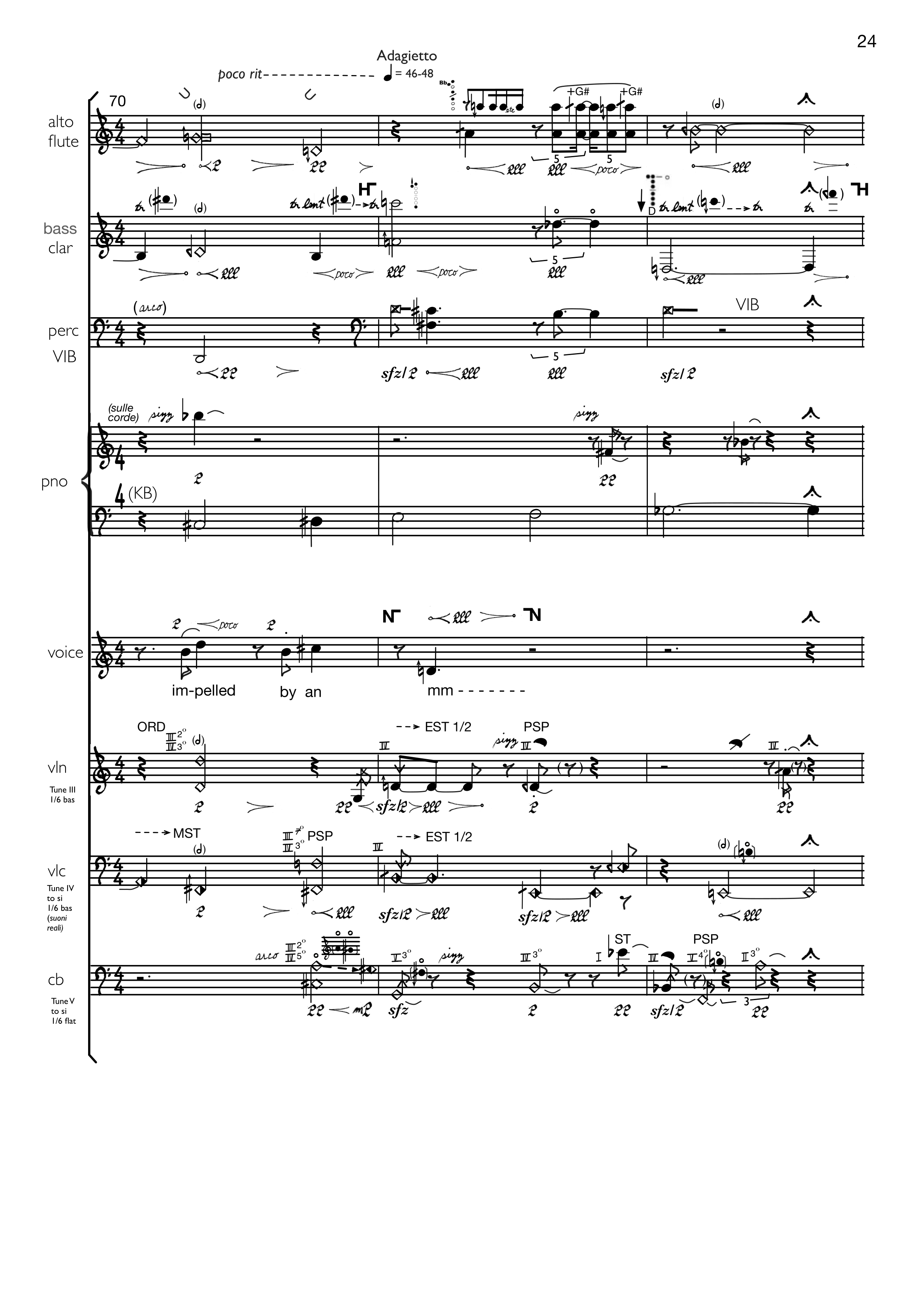

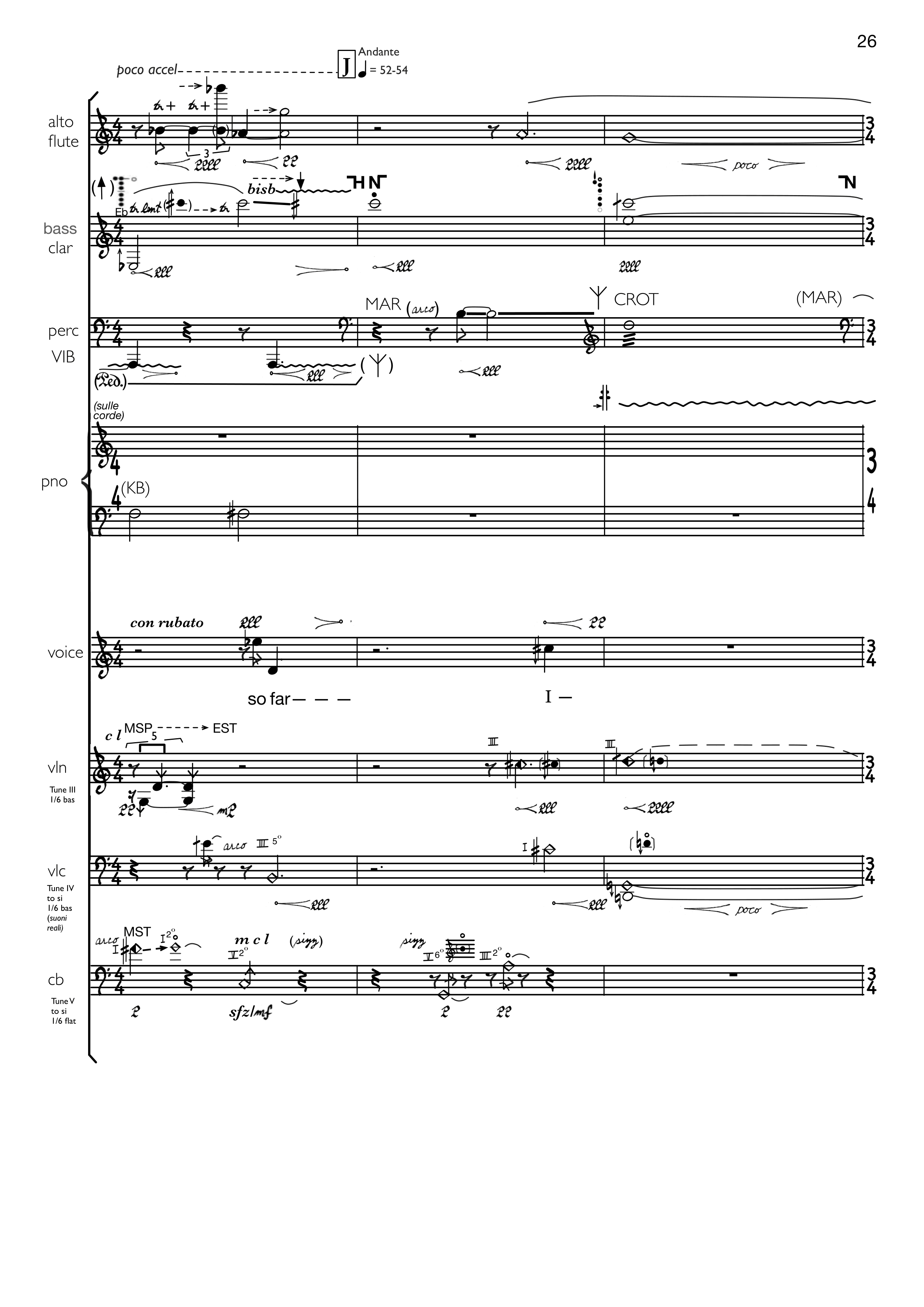

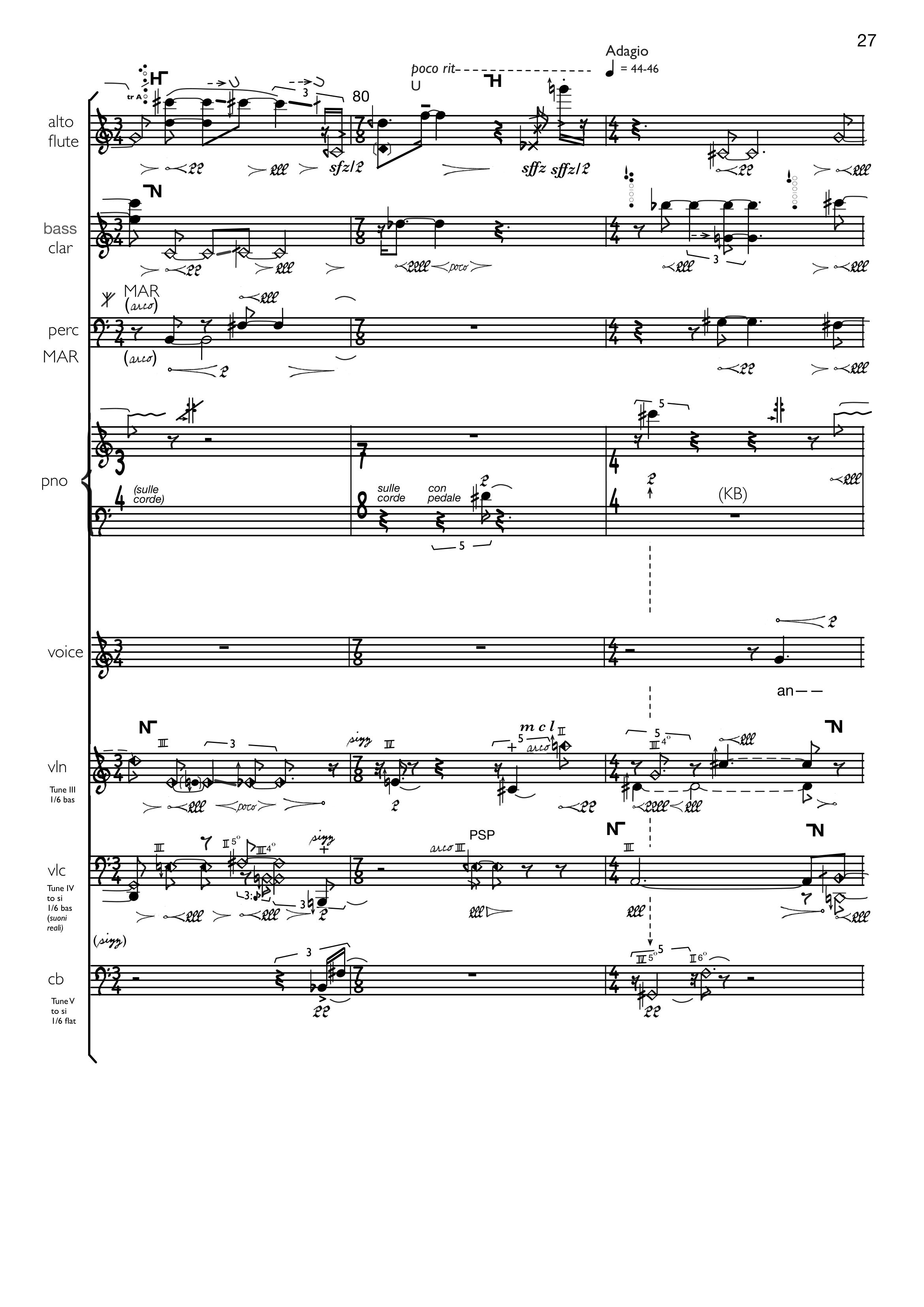

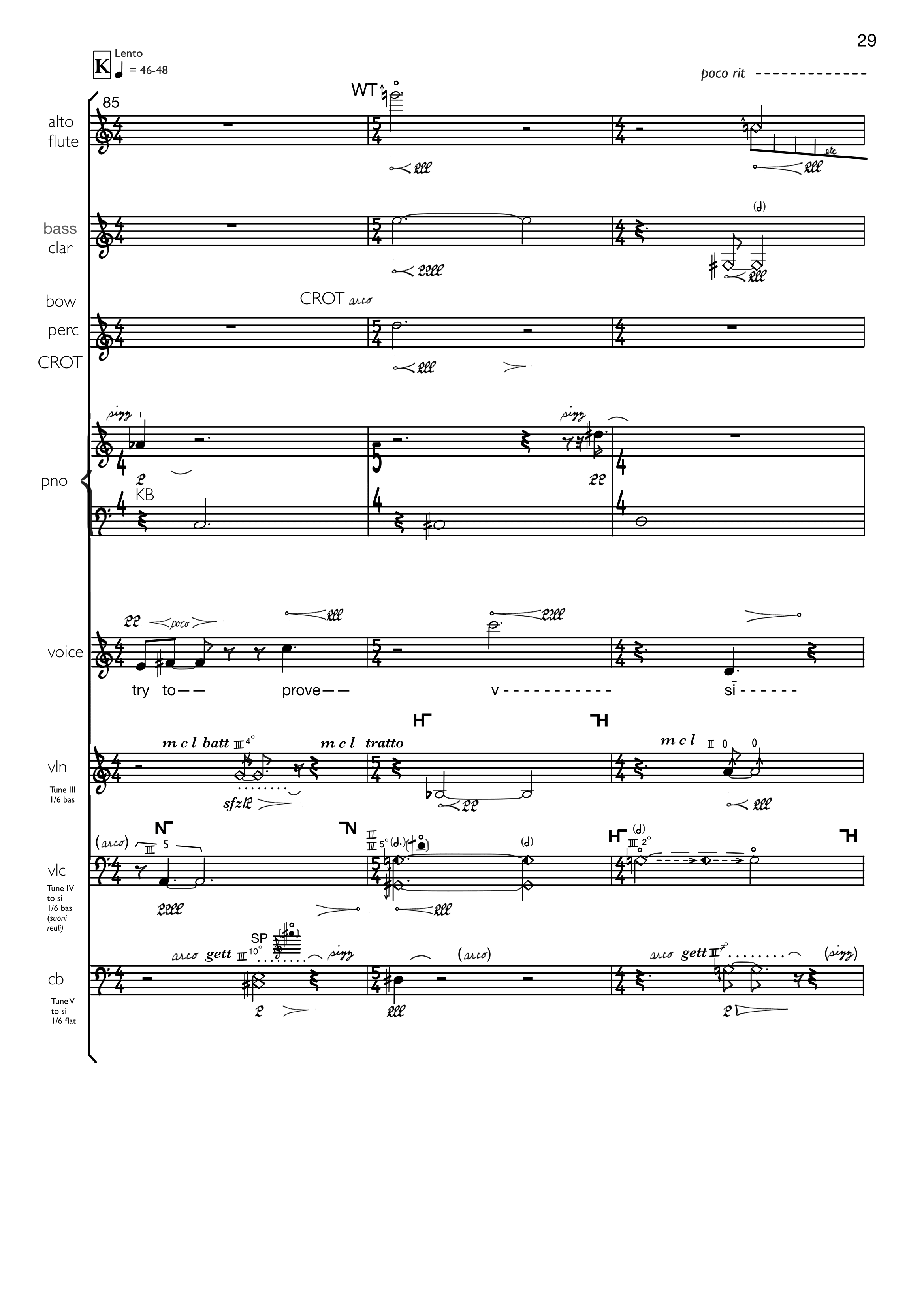

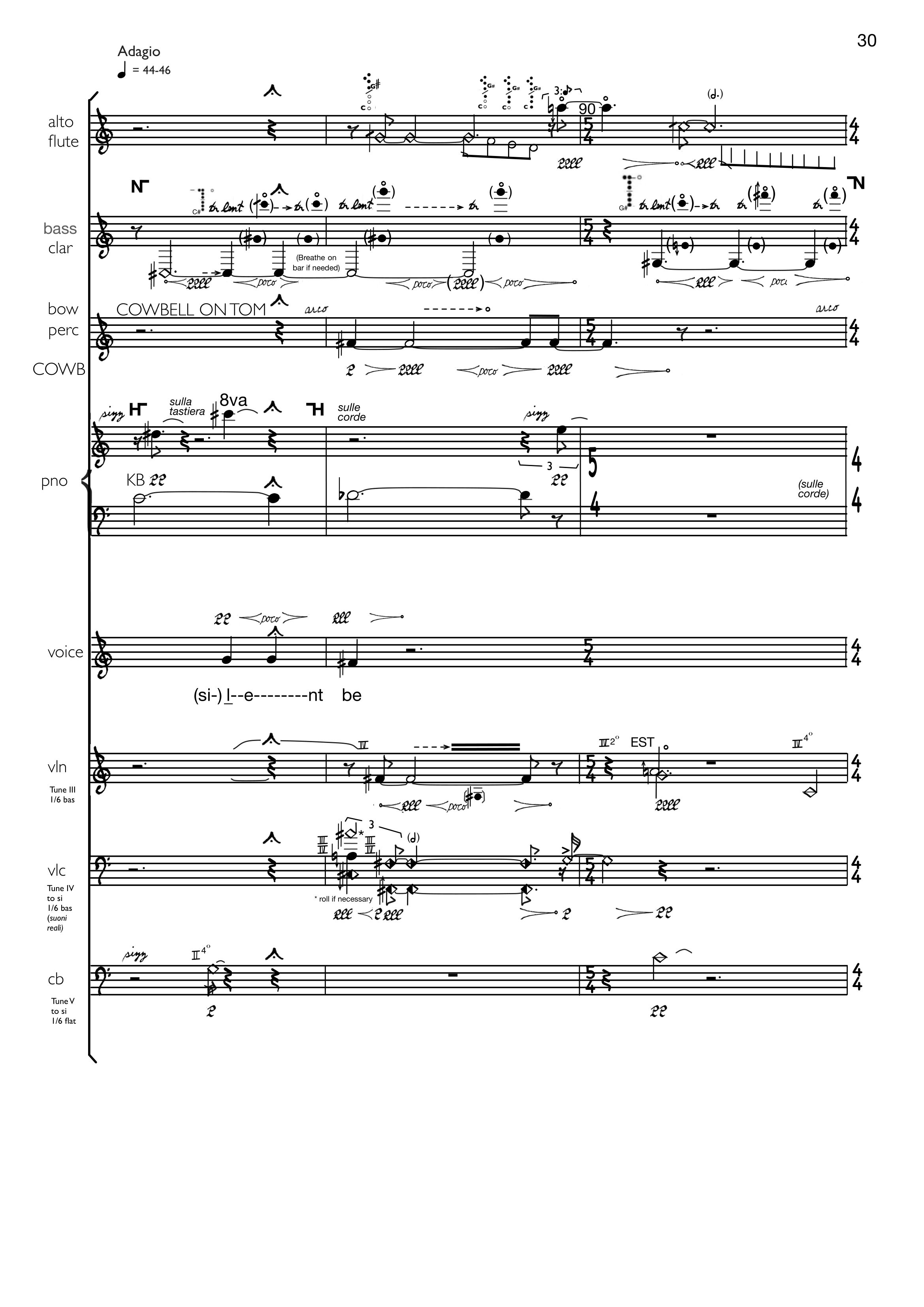

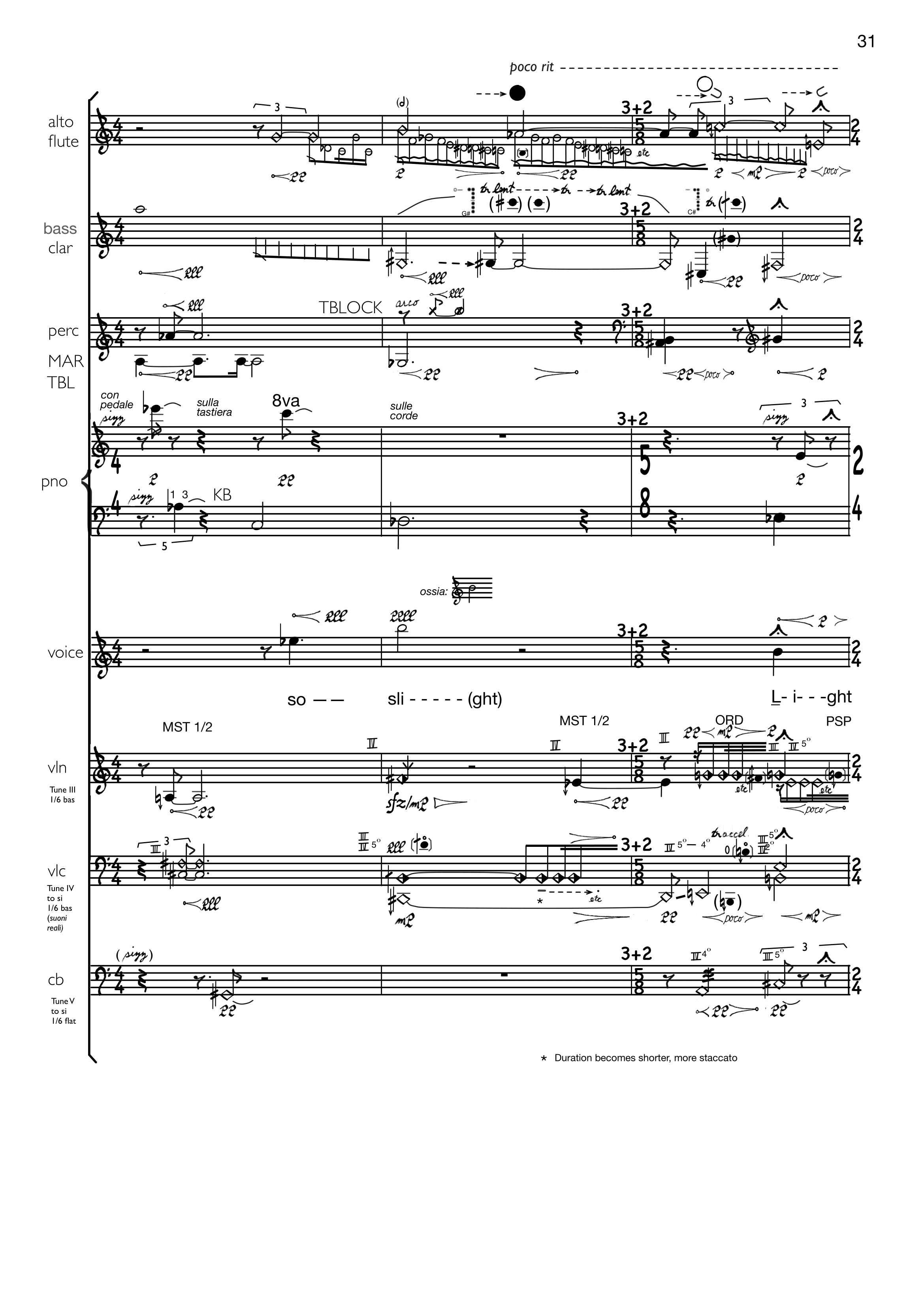

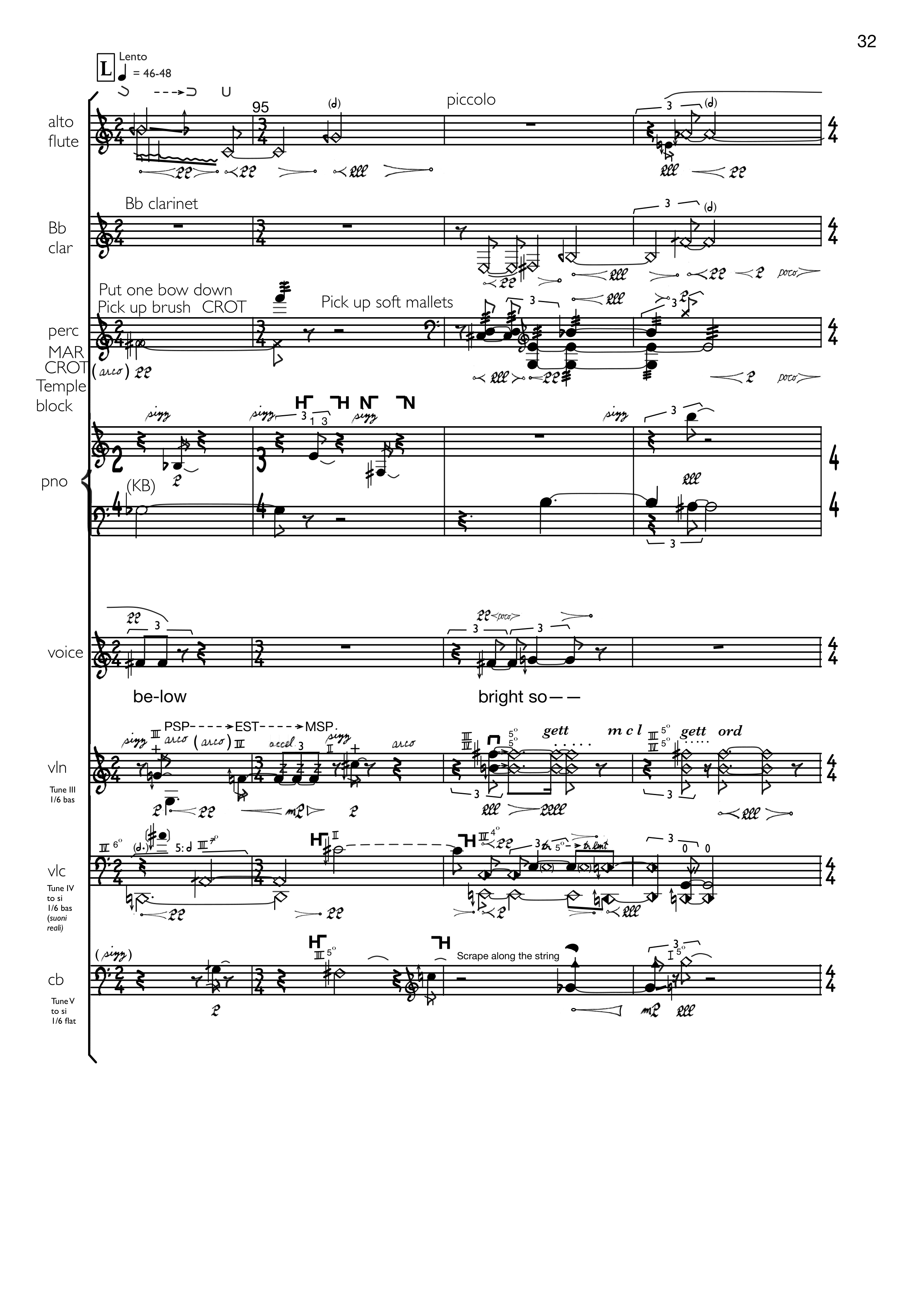

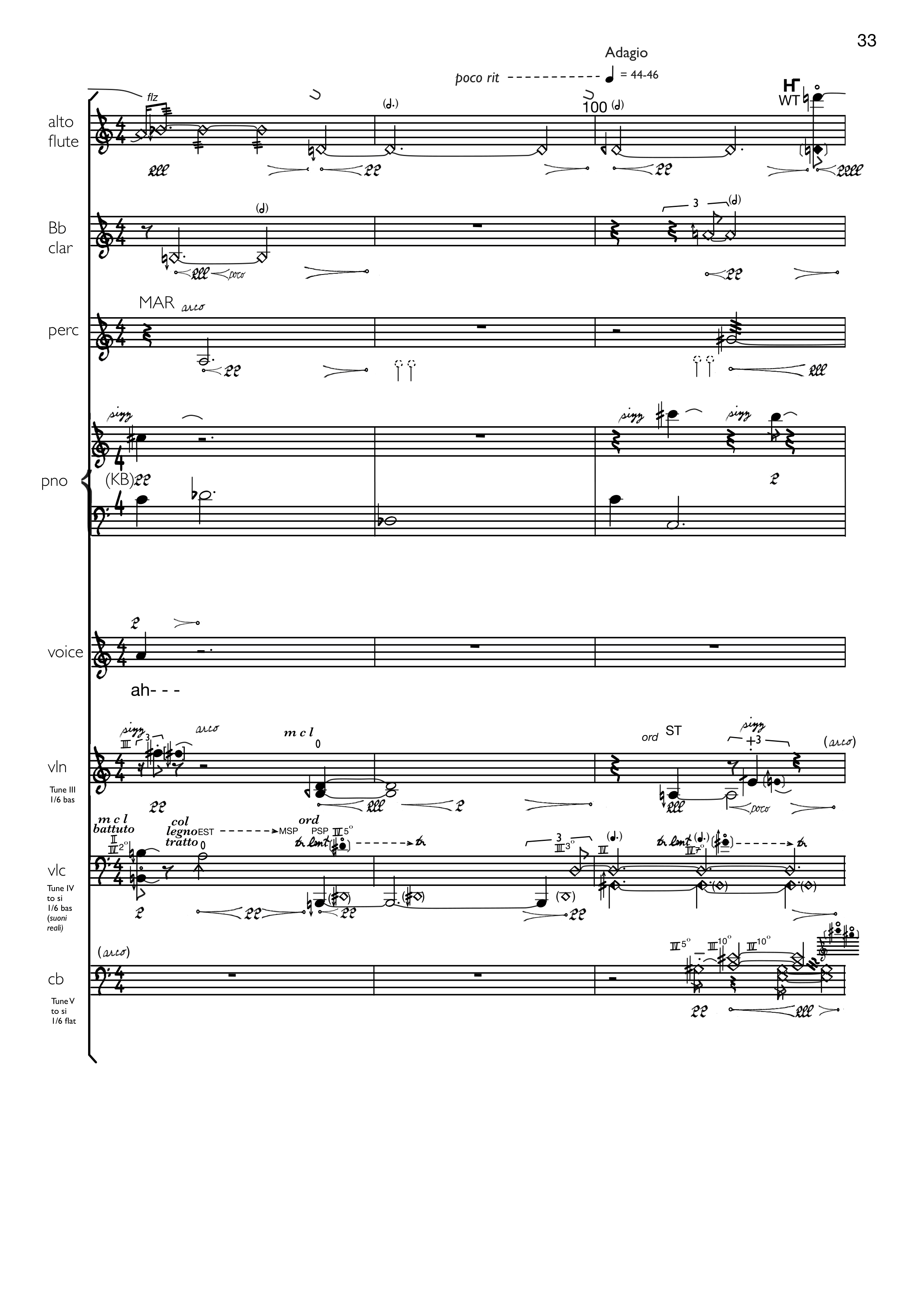

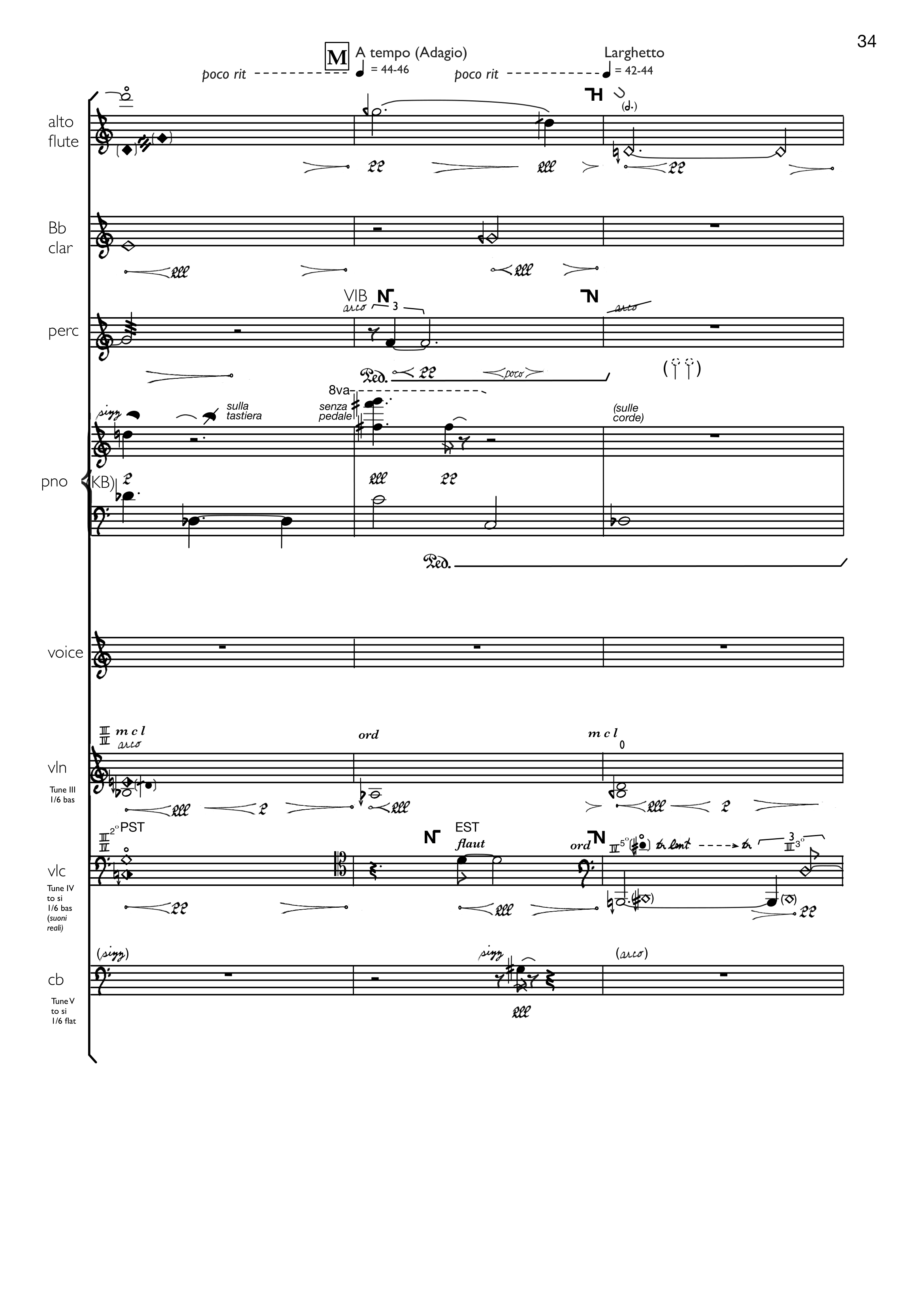

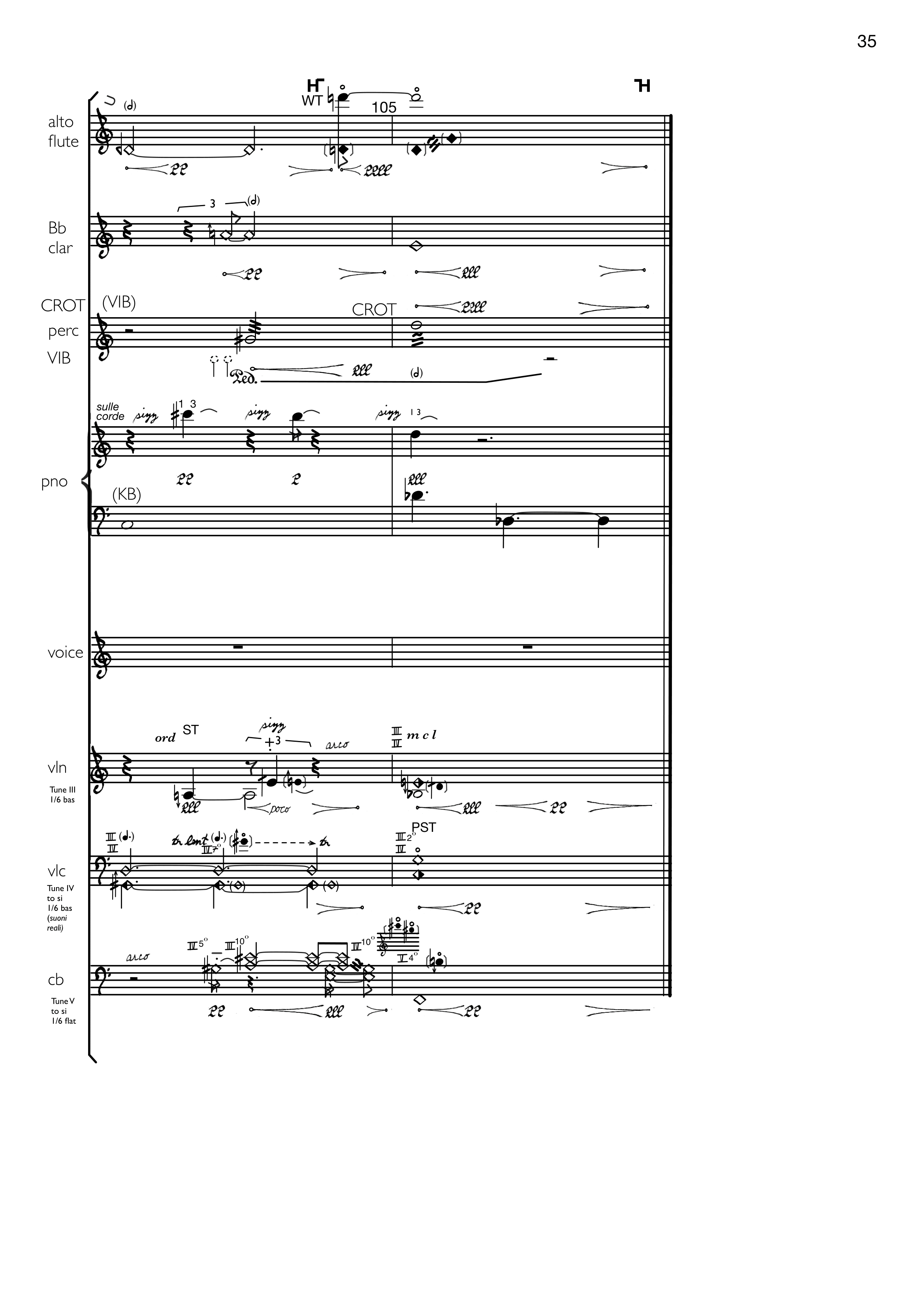


TEXT

ea-each one is—

a he-lix —

a-

he-lix ver- tice

Oc-cam’s out

diff-

co’s

text up n- - - - - - - nd

——

lean to

[e ]

ah ee

of

[e ]

roots

I found most things

watch be- low

our- selves — who —

in - - - - fin - ite

it is shown —

I —

ee - - - - - - - - - - -

——

[e ]

when I —

(ah) - - - - - -

ah - - - - - - - - -

[e ]- - - - - - - - -

ah ü

[e ] he

——

who — o-pens out the high-est

let be —

Be ne-ver- less—

all— whose wa-tching a- - - as we

——

hi - - - - gh

a-

bright so— fe - - - - - ar

of a - - ll

im-pelled by an mm - - - - - - -

bright so fa- air—

so far— — — I—

an—— an - swer

try to—— prove——

v - - - - - - - - - - -

si- - - - - - - l--e--------nt be

so —— sli - - - - - (ght) L- i- - -ght be-low

bright so—— ah- - -